“If you don’t make it accessible, it won’t be usable.”

Accessibility was in the air at Drupal GovCon 2023. The topic dominated during conference sessions and attendee conversations alike — not surprising, given its importance for municipalities and governments.

Users have the same expectations for government websites that they do for any other brand’s site: simple, secure, and accessible user experiences that render well on any device. Much of the content at this year’s GovCon focused on the knowledge, tools, and services government teams need to create accessible sites (and the challenges that can arise along the way).

Over three days in Bethesda, Maryland, 600 designers, developers, and other Drupal pros working in government gathered to learn, improve, and innovate. We were lucky to be among them this year.

Here, we pull back the curtain on what we learned, who we met, and what we’re excited to put into action now that we’re home.

But First, Why Do We Attend Drupal GovCon?

Drupal GovCon combines two passions for Oomph: immersing ourselves in all things Drupal and helping our government clients create websites that are truly built for the people. We attended this year’s event to:

- Stay connected to the Drupal community. Oomph has been building in Drupal since 2008, and we love its philosophy of transparency and collaboration. The company stays active by attending events like DrupalCon, participating in its mentorship program, hosting local meetups and events, and contributing new features to Drupal’s ever-growing offerings.

- Get ideas and inspiration. We valued seeing how other developers use Drupal and speaking with those working in government about what a successful government-developer partnership looks like to them.

During the event, we networked with fellow developers, squeezed our way into plenty of jam-packed sessions, and presented our own session on accessibility audits. All the while, we looked for opportunities to build upon the Drupal work Oomph has already done for governments and municipalities like RI.gov.

Oomph Presents: The Many Shapes and Sizes of Accessibility Audits

“All websites are not created equal. And neither are accessibility audits.”

That’s our accessibility philosophy, and it’s also the opening line from our session, which was part of GovCon’s accessibility track. Many attendees hadn’t conducted an accessibility audit before, so this session focused on the basics: what to audit, which tools to use, and which deliverables give digital teams something actionable for auditing and implementation.

Roughly 25 attendees joined to learn key considerations like:

- The components of an audit. Consider the people and the work. That is, who needs to support the audit (think website owner, development team, and more) and the work the audit will require (defining the scope, choosing the tools, and assigning a budget and timeline).

- Critical audit tools. The best tools for the job depend on your level of experience. We covered 22 of them and explained which tools to use if you’re a beginner, intermediate, or advanced developer/auditor.

- Inclusivity. Accessibility makes your website more usable, but it’s important to actively build experiences that include people of all abilities. The Voluntary Product Accessibility Template (VPAT) keeps inclusivity front and center.

- Remediation. An audit is only effective if it’s actionable. We offered some tips for turning audit insights into a path to improvement.
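To make the idea of an automated audit check concrete, here is a minimal sketch in Python of one common check: finding images that have no `alt` attribute. It is an illustration only, not one of the tools covered in the session; the class name, function name, and sample markup are all hypothetical, and a real audit tool checks far more than this.

```python
from html.parser import HTMLParser
from typing import List


class MissingAltChecker(HTMLParser):
    """Collects <img> tags that have no alt attribute at all (hypothetical helper)."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[str] = []  # src values of flagged images

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # An empty alt ("") is valid for decorative images, so only flag
        # images where the attribute is absent entirely.
        if "alt" not in attr_map:
            self.missing.append(attr_map.get("src") or "<no src>")


def find_images_missing_alt(html: str) -> List[str]:
    """Return the src of every image in the markup that lacks an alt attribute."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


# Made-up sample markup: one described image, one decorative, one unlabeled.
sample = (
    '<p><img src="seal.png" alt="City seal">'
    '<img src="divider.png" alt="">'
    '<img src="chart.png"></p>'
)
print(find_images_missing_alt(sample))  # ['chart.png']
```

A check like this only tells you that an attribute is missing. Whether the alt text that does exist is meaningful still takes a human reviewer, which is why an audit pairs automated tools with manual testing.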

To dive deeper, check out our roundup of accessibility resources here.

Our Must-Watch Drupal GovCon Sessions

Like all Drupal events, GovCon packed a lot of learning into just three days. While there were insights aplenty in every session we attended, these are the ones to watch from start to finish (or rewatch, in our case).

Automating Accessibility Testing With DubBot

This session was both pragmatic and inspiring. Presenter Jesse Dyck of Evolving Web started by reminding us all that accessibility is how we ensure truly equal access to information — a key tenet of government communications.

He then explained how tools like DubBot, an automated testing tool, can perform accessibility testing, provide quality assurance, and even take the pulse of your SEO efforts. Beyond the how-tos, Jesse also offered his own best practices for identifying and managing key data points.

Navigating the Digital Realm: A Journey Through the Eyes of a Screen Reader User

Amid all the tools and tech, it’s sometimes easy to forget that we’re innovating for people who use assistive devices to access critical information. Matthew Elefant, managing director at Inclusive Web, conducted a live demonstration of how blind and visually impaired people navigate the internet. Chris, a tester working with Matthew, joined remotely and walked through his experience of navigating websites with a screen reader, including the challenges he often runs into. The session brought a powerful human lens to the challenges people with disabilities face any time they get online.

Matthew reminded us that accessible websites aren’t necessarily usable and recommended some key opportunities to bridge that gap.

Editoria11y v2: Building a Drupal-Integrated Accessibility Checker

John Jameson designed Editoria11y v1 to meet an important Drupal need: a robust yet intuitive accessibility checker. During this session, John walked through his wishlist for Editoria11y v2 (checking more complex HTML and letting users dismiss non-critical alerts, among other items) and explained how he engineered each one (spoiler alert: with help from a long list of mentors).

His session affirmed the innovative and supportive power of the Drupal community and taught us some of his most effective techniques for building complex web applications.

Honorable Mention: How AI Works and How We Can Leverage It

Artificial intelligence (AI) is the talk of the tech world, but it’s a bit of a sticky subject for governments and municipalities. Though AI can introduce efficiencies, it can also compromise compliance in the highly regulated public sector.

Michael Schmid offered some real-world ways to use AI, like training a large language model (LLM; think ChatGPT) or generating images, all with a focus on how those tools fit into a decoupled architecture like Drupal. This session is a great watch for anyone interested in understanding the options for setting up your own LLM.

Building Community at GovCon

Year after year, Drupal continues to shine because of the community it builds. At GovCon, we deepened long-held connections and formed some exciting new ones. We even got to meet one of our favorite contractors in person.

GovCon also echoed many of the conversations our Oomphers are already having. The conference’s timing couldn’t have been better: We brought many of the ideas shared to our recent Squad Summit (our “squads” are dedicated groups of designers, developers, and engineers who serve our clients). Together, our squad explored ways to incorporate emerging accessibility tools into our work and deepened our resolve to build a Web that works for everybody.

Let’s Talk GovCon

Attended GovCon and can’t get enough of the accessibility conversation? Couldn’t make it but want to brainstorm about how you can put these insights into action on your website?

ARTICLE AUTHORS

Kathy Beck

Senior UX Engineer

Hi, I’m Kathy, and I’ve been working with Drupal for over 10 years. As a UX Engineer here at Oomph, I am focused on front-end development and site building and I collaborate with the back-end engineering team and the design team. I am constantly thinking about accessibility and test solutions in multiple browsers as well as screen readers and automated tools.

Julie Elman

Senior Digital Project Manager

As a Digital Project Manager at Oomph, my main responsibilities include building and maintaining client relationships, managing projects, and collaborating with our talented development and creative teams to execute and deliver top-quality projects.

What’s been holding you back from migrating your website off of Drupal 7?

Maybe your brand is juggling other digital projects that have pushed your migration to the back burner. Maybe the platform has been working well enough that migration isn’t really on the radar. Or maybe (no shame here) you’ve been overwhelmed by such a massive undertaking and you’re feeling a little like Michael Scott:

“I don’t wanna work. I just want to bang on this mug all day.”

We get it. Whether you’re migrating to a new version of Drupal or a different platform, it’s a time-consuming process — which means now is definitely the time to get started.

While the Drupal Security Team recently announced it would extend security coverage for Drupal 7 from November 2023 to January 2025, those extra 14 months are ideally the time to plan and execute a thoughtful migration. Giving yourself ample time to plan for life after Drupal 7 is something Oomph has been recommending for a while now, and we’re here to help you through it.

3 Reasons To Start Your Drupal 7 Migration Now

1. Because Migration Takes Time

Migrating your site isn’t as simple as flipping a switch — and the more complex your site is, the more time it can take. Imagine two boats changing course in the water: It takes a massive container ship longer to turn than a small fishing boat. If your site is highly complex or has a lot of pages, it could easily take a year to fully migrate (not including the time it takes to select a partner to manage the process and kick off the work).

Even if your site isn’t so robust, you’ll do yourself a favor by building in a time buffer. Otherwise, you could risk facing a security gap if you run into complications that slow the process down. Some of the major factors that can impact timeline include:

- Developing a new site theme. The switch from the PHP Template Engine in Drupal 7 to Twig in Drupal 8 and above means you’ll need to rewrite your custom theme if you’re planning to upgrade to Drupal 10 — even if you’re not planning to redesign your site.

- Navigating custom code. If your current site relies on custom code, those modules will need special attention during the migration process.

- Migrating community-contributed modules. One of the best things about Drupal is the fact that it’s open-source, which means community members are constantly contributing new modules and features that anyone can use. While many Drupal 7 modules have a simple migration path to Drupal 10, not all do. Certain modules, including Organic Groups, Field Collections, and Panels, will need to be reviewed and migrated manually. Even when a module has a set migration path, it can be quicker to migrate it by hand (Webforms and Views are two good examples).

- Making other upgrades. You know the old saying, “Never let a good migration go to waste?” (OK, we made that up.) Still, a migration is a smart time to tackle other changes to your site, from updating your information architecture to modernizing your design. If you’re planning on a more holistic site update, there’s even more reason to start now to make sure your project is wrapped before the end of 2024.
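One way to get a feel for the module work ahead is to sort your site’s module list into rough buckets before you plan the timeline. The Python sketch below does that using only the module names mentioned above. The function name, bucket labels, and sample inventory are hypothetical, and the lists are a starting point for discussion rather than a complete compatibility reference.

```python
# Modules named above as needing manual review and migration.
MANUAL_REVIEW = {"Organic Groups", "Field Collections", "Panels"}
# Modules named above as having a migration path but often quicker by hand.
OFTEN_QUICKER_BY_HAND = {"Webforms", "Views"}


def triage_modules(enabled_modules):
    """Group module names into rough migration buckets (hypothetical helper)."""
    plan = {
        "manual_review": [],
        "consider_by_hand": [],
        "check_migration_path": [],  # everything else: look up its path
    }
    for name in sorted(set(enabled_modules)):
        if name in MANUAL_REVIEW:
            plan["manual_review"].append(name)
        elif name in OFTEN_QUICKER_BY_HAND:
            plan["consider_by_hand"].append(name)
        else:
            plan["check_migration_path"].append(name)
    return plan


# Made-up inventory for illustration; substitute your own site's module list.
site_modules = ["Views", "Panels", "Pathauto", "Organic Groups", "Token"]
for bucket, names in triage_modules(site_modules).items():
    print(f"{bucket}: {', '.join(names) or 'none'}")
```

The more modules that land in the first two buckets, the more buffer your timeline is likely to need.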

2. Your Site Performance Is Less Than Ideal

Yes, Drupal 7 sites technically have security coverage until 2025. But if you’re still on Drupal 7, you’re missing out on the best that Drupal currently has to offer.

First, Drupal 7 is not fully compatible with PHP 8, a new and improved version of PHP that many websites are built on today. While Drupal 7’s core supports PHP 8, some contributed modules or themes on your site might not, which could create hiccups in your site performance.

In addition, the Drupal community is constantly putting out new features that aren’t available on Drupal 7. Some of the most exciting ones include:

- Layout Builder, a visual design tool that makes it easier for content editors to build web pages and gives designers more room to flex their creative muscles.

- Workspaces, an experimental feature that allows users to stage content or preview a full site by using multiple workspaces on a single site.

- Better accessibility and responsive design to create genuinely useful experiences for all site users.

- API-first architecture and decoupled options to make it easier to integrate your site with any other tools or functionality you need.

- Twig, a templating language from Symfony that lets you write concise, readable templates that are more friendly to web designers.

- SaaS offerings like Acquia’s Site Studio to build beautiful pages more easily.

Sticking with Drupal 7 means not only missing out on this new functionality, but also on support from the Drupal community. Interest and activity from web devs on Drupal 7 continue to wane, which means your team may find it harder to get help from others to deal with bugs or other issues. You’re also likely to see fewer new features that are compatible with the older version, so while other sites keep up with the evolving digital landscape, a Drupal 7 site is increasingly stuck in the past.

3. To Save Your Team’s Sanity

Odds are good that if your site is still running on Drupal 7, your team is already having trouble trying to make it work for your needs. Starting your migration now is key to getting your site running smoothly as soon as possible and sparing your team unnecessary misery.

Consider these pain points and how your team can address them in your migration:

- Is your team frustrated with aspects of Drupal 7 that limit your site’s functionality?

- Is technical debt making your site maintenance more complicated, expensive, or both? Could it be affecting your hosting or vendor costs?

- Is your team struggling to find support or third-party integrations that work with the platform?

- Are they holding off on major new initiatives because the site can’t support them?

Options for Life After Drupal 7

Now that Drupal 7 is officially winding down, what’s next for your website? Deciding whether to go Drupal-to-Drupal, Drupal to another CMS, or a different route entirely depends on your technical needs and resources.

Drupal 10

If Drupal 7 has served your team well in the past, then Drupal 10 is the logical choice. The newest version of Drupal is ideal for more complex sites with extensive content modeling, varying user roles, and workflow requirements. To make things easier, you can leapfrog over Drupal 8 and 9 and migrate your Drupal 7 site directly to the latest and greatest version.

Many of our clients at Oomph are going this route, since Drupal 10 offers both a range of new features and familiarity for Drupal-versed teams to cut down on the post-migration learning curve.

WordPress or Another CMS

Not sure if Drupal 10 is the best fit? If your site is on the small side or if you don’t require lots of functionality, then Drupal may be more than you really need.

In that case, moving off of Drupal altogether might be in your best interests, helping you streamline your ongoing development needs and reduce maintenance and hosting costs. Here are a few alternatives for Drupal 7 users looking for a less robust platform without sacrificing a great web presence:

- WordPress

- Squarespace

- Wix

- A headless CMS, supported by a decoupled framework such as Gatsby or Next.js

An Internal Stopgap

Depending on your organization, now might not be a good time to migrate or rebuild your site. This is especially true if you’re already invested in an ongoing site redesign or rebuild. If you’re still trying to figure out your digital future, consider temporary measures you can take to stay protected once Drupal 7’s security coverage ends.

One possibility to consider is rolling up your site under another digital property in your organization. Even if it’s only an interim solution, it can help you buy time to make the best long-term plan for your website. Another option would be to develop a smaller static website with a refreshed design that would eventually be replaced with the upgraded CMS.

Tips for a Successful Migration

As your site’s technical foundation, Drupal delivers plenty of horsepower. However, the digital home you build on that foundation is what really counts. It’s crucially important to make sure all the pieces of your site work together as one — and a migration is a perfect opportunity to assess and optimize.

Over time, websites tend to accumulate “cruft” — the digital equivalent of dust and cobwebs. Cruft can take many forms: outdated, unnecessary, or poorly written code; deprecated site features; or obsolete or outdated content, files, and data. Whatever cruft exists on your site, migration is a chance to do some digital spring cleaning that can improve site performance and reduce maintenance time.

Beyond digital hygiene, evaluating each element of your site strategically can help you get the greatest business value from your migration.

- Information architecture: How easy is it to navigate around your site? Do your sitemap and information architecture still reflect your offerings and user needs accurately?

- Content: Does your site’s content still engage your audience and support business goals? Think about the impact of any changes to your information architecture: Would they require you to add, change, or remove content?

- Design: Will your existing site design work with the CMS you’ve chosen? Does it need updates to meet current standards for UX, accessibility, and responsiveness?

- Integrations: Should you add or remove any APIs or integrations? Keep in mind that many features not available in Drupal 7 are now built into the core of Drupal 10 or are available as contributed modules, so you may be able to optimize some of your site’s key features.

No matter what you plan to tackle alongside your migration, it’s a big project. An experienced guide can make all the difference. Our team of die-hard Drupal enthusiasts has led many Drupal-to-Drupal and replatforming projects for clients, including complex e-commerce and intranet sites. For us, a successful migration is one that’s grounded in strategy, follows technical best practices, and — most importantly — can support and evolve with your brand over time.

Need a hand deciding which route to take for your Drupal 7 migration? We’d love to talk.

When I first discovered Drupal, it was love at first click. I was fascinated by how easy it was to build powerful websites and digital platforms with its free, open source tools.

One of the things that attracted me to the Drupal community from the beginning was its commitment to giving back. Every line of code, module, and feature created with this open source content management system is available for anyone to use – and there’s nothing Drupal enthusiasts love more than turning other devs into Drupal converts. Drupal is a community-driven movement focused on ensuring that everyone can tap into its potential.

I’ve been involved with Drupal for 16 years (my experience can officially drive a car now!). Throughout the years, I’ve had the opportunity to attend DrupalCon and actively contribute to Drupal through my work at Oomph. As an agency, Oomph has given back to the community by sponsoring the annual New England Drupal Camp; hosting the monthly Providence Drupal Meetup; developing new modules like Oomph Paragraphs, Shared Field Display Settings, and Layout Section Fields; and supporting the Talking Drupal Podcast.

On a personal level, I’ve always wanted to do more for Drupal, but it’s not always easy to find the time when you spend your days building digital platforms and your nights convincing your energetic 3-year-old to go to bed. While many people contribute their time and expertise by developing code or modules, finding the time for those contributions has been challenging for me.

In my search for ways to give back, I discovered mentoring. My decision to become a mentor was driven by a desire to share my knowledge and experiences with others and help them navigate the Drupal world.

I also had an ulterior motive: I want others to fall in love with open-source software just as I did. By fostering a passion for Drupal and open source in general, we can ensure our community continues to thrive and flourish. For those just beginning to explore open source, the Open Source Utopia Podcast and this talk from DrupalCon 2023 are great intros to the topic (warning: you might get hooked!).

Becoming a Drupal mentor has been an incredibly fulfilling experience, allowing me to give back without sacrificing work-life balance and witness the growth of aspiring web developers. If you’re a Drupal power user eager to give back or an emerging developer looking for mentoring support, I’ve got you covered. Here’s what I’ve learned over the first year of my mentoring journey.

DrupalEasy

DrupalEasy is a platform that offers training programs for people who want to become Drupal developers. Their courses provide the knowledge and skills to excel in Drupal.

During their training, DrupalEasy students get helpful resources and a designated mentor (that could be you!) who acts as a direct line to all things Drupal. As a mentor, you’ll be there to help them overcome any challenges.

As part of my mentoring experience, I volunteered to help with the DrupalEasy program. It’s been satisfying to help students learn about the ins and outs of Drupal – and seeing their enthusiasm and talent makes me excited for the future of the community.

Discover Drupal

Discover Drupal is a fantastic initiative providing individuals from underrepresented groups with the opportunity to learn Drupal and kickstart their careers in web development.

The program guides users through various training programs, culminating in a trip to DrupalCon. It’s an incredible experience for these developers, as it feels like a graduation ceremony where they get to meet and connect with everyone in the Drupal community.

Something that sets Discover Drupal apart is the hands-on mentorship and education they provide for each student; weekly office hours bring students and mentors together in a collaborative environment, while regular workshops go deeper on specific areas students (and mentors) need to know. As a mentor, I supported and guided my mentee through the program, helping her succeed and nurturing her love for all things Drupal.

My mentee, Cindy Garcia, had been working in agencies doing WordPress development but found Drupal much more appealing. She resonated with Drupal’s mission and brought a unique perspective and passion to the community. (Fun fact: Cindy was a wrestling referee and an award-winning martial artist!)

We began our 1-1s by meeting twice weekly, gradually transitioning to once a week as Cindy grew more comfortable with Drupal. She drove the agenda, and I was there to help her solve any challenges she encountered. We started with the basics, such as making a view work within Drupal. I walked her through the process and guided her to ensure she grasped the concepts effectively.

As our mentoring relationship evolved, we delved into more complex technical topics. Cindy would come to me with specific questions about modules or code she was working on. It became a form of pair programming, where we would analyze her code together, troubleshooting and finding solutions collaboratively.

Most bootcamps are designed to teach you only basic concepts that can take years to master, says Cindy. But with Discover Drupal, Cindy was able to build four Drupal websites and earn two contribution credits within a year. “I really felt the Discover Drupal program hit their benchmarks when it came to training new developers,” Cindy says. “It gave me a new career to look forward to and a great community to plug into when I am facing problems I can’t solve on my own.”

The most rewarding part of this mentoring experience for me? Meeting Cindy in person at DrupalCon and celebrating her achievements together. I can’t wait to watch how her career unfolds from here.

Volunteering at DrupalCon

In addition to meeting Cindy face to face, I also had the chance to give back at DrupalCon 2023.

Each year, DrupalCon hosts a “Contribution Day,” where attendees are invited to spend the day contributing their expertise — including coding, documentation, translation, graphic design, and more — to issues they’re passionate about. It’s a fantastic opportunity to contribute to the ever-expanding Drupal resource base in a meaningful way. True to its mission of inclusion, Drupal encourages first-time contributors to participate to ensure everyone has a chance to make a difference.

I had the chance to support the first-time contributors workshop, working alongside volunteers and other mentors to ideate and build in real time. We collaborated, shared knowledge, and helped newcomers navigate their first steps into the Drupal contribution space. The feeling of making a real impact and empowering others was so gratifying — I’m already eager to head back for DrupalCon 2024.

My Biggest Takeaway? There’s Room for Everyone in Drupal

As I transitioned from Drupal user to Drupal mentor this year, I couldn’t help but feel a sense of fear and uncertainty at first. I questioned whether I needed more knowledge or experience to make a meaningful contribution.

However, I quickly discovered everyone in the Drupal community is incredibly supportive and genuinely wants to help others succeed. I’m so glad I didn’t let self-doubt hold me back.

Mentoring is not about having all the answers or being the smartest person in the room. It’s about sharing your experiences and insights and guiding others through their Drupal journey. By offering support and encouragement, you can have a powerful effect on someone’s career trajectory.

The Drupal community is built on collaboration and mutual support. We’re all on this journey together, constantly learning and evolving.

If you’re interested in getting involved, check out Drupal’s resources on mentoring with DrupalEasy and Discover Drupal. Hope to see you out there on the mentoring journey!

We are thrilled to share that our client, Lifespan, has been named to the Nielsen Norman Group 2023 list of the ten best employee intranets in the world. Award winners are recognized worldwide for their leadership in defining the field of UX. NN/g is dedicated to improving the everyday experience of using technology. The company has evaluated thousands of websites and applications, consulted for leading brands in virtually every industry, and selected the 10 best intranets annually since 2001.

A Collaborative Process

Lifespan collaborated with our team on strategy, stakeholder management, UX research, UI design, and development. We developed the intranet’s information architecture and prototyped and tested tablet versions of the mobile intranet. Our engineering team conducted a technical discovery and completed the full intranet development, which included the intranet’s custom features and integrations. The result was an intranet that met employees’ personal needs while building a sense of community across Lifespan’s large organization.

“It’s wonderful to see the culmination of so much research, feedback, conversation, and collaboration be recognized and placed among some of the best brands in the world,” said Oomph’s Director of Design & UX, J. Hogue. “This intranet required 18 months of employee-focused strategy, research, design, testing, and development with the latest technology, security best practices, and accessibility design. The result supports employees and positive patient outcomes across the hospital system. We are intensely proud of the tailored approach the teams used to create a digital experience that reflects Lifespan’s company culture.”

Helping to Connect a Remote Workforce

Lifespan is a digital workplace, and the intranet is the hub that connects employees to the hundreds of digital tools and resources they need to deliver health with care every day. Most of Lifespan’s 16,000+ employees use the intranet daily to complete their work tasks, find information about benefits, or read the latest news. The intranet routinely sees more than 1M page views each month.

Physicians, nurses, allied professionals, and clinical support staff often use the intranet to access policies and job tools that are critical for patient care and often needed immediately. Administrative support staff rely on the intranet to access information and third-party tools that are critical to business operations such as purchasing, finance, materials management and supply chain operations, and facilities maintenance, to name a few.

For all users, the intranet is a central hub for department information, professional education and training, news and events, the staff directory, HR and payroll information, digital tools to request services throughout the organization (both clinical and administrative), and remote access to email. Most importantly, the intranet provides a place where employees can learn what’s happening across the Lifespan system and at each individual affiliate location.

“The team responded to the importance of communication and connectedness and used those themes as the guiding strategy when redesigning the intranet. They made it more accessible, user-friendly, and contemporary, thanks to their vision, planning, and execution,” said Lifespan Senior Vice President, Marketing and Communications, Jane Bruno. “Winning this award is a testament to the hard work of Lifespan’s marketing and communications and information services teams, and their collaboration with Lifespan’s digital design and development partner, Oomph.”

All of us at Oomph are extremely proud of the outcome as well, and it’s even more gratifying to work side-by-side with an organization that’s so committed to improving the employee experience. After an award-winning collaboration like this, we look forward to continuing our partnership in the years to come.

More information about the 2023 winners is on the NN/g website. The winning intranets are also featured in NN/g’s publication, Intranet Design Annual 2023: Year’s 10 Best Intranets. The publication includes a detailed case study on Lifespan’s intranet project and the vision, working methods, and management strategies underpinning its success.

Past recipients of the top 10 intranet award include BNY Mellon, Korn Ferry, The United Nations, Barclays, 3M, The Estée Lauder Companies Inc., International Business Machines Corporation (IBM), Princeton University, and JetBlue.

Interested in learning more about Oomph’s award-winning work? Take a look at some of our favorite projects and see how we make a difference for clients nationwide.

The full press release can be found at: https://www.lifespan.org/news/lifespan-named-top-10-best-intranets-world-nielsen-norman-group-nng

For digital ecosystem builders like us, DrupalCon is kind of like our Super Bowl: best-in-class web devs coming together to level up our Drupal prowess.

Earlier this summer, we joined 1,800 other Drupal users in Pittsburgh, turning the David L. Lawrence Convention Center into a four-day meeting of the minds for anyone who builds with Drupal.

Why DrupalCon? Well, we’re huge fans of the platform. We’ve been developing Drupal projects since 2008 and have served the Drupal community by sponsoring the annual New England Drupal Camp; hosting the monthly Providence Drupal Meetup; developing new modules like Oomph Paragraphs, Ooyala, and Getty Images; and supporting the Talking Drupal Podcast.

That’s why, for us, DrupalCon has become an in-person way to validate the work we’re proud to do online. This year (our fourth!), Oomph sent four team members to host our booth in the Expo Center and soak up the positive energy and game-changing innovation DrupalCon is known for.

Here’s what we saw, what we learned, and what we can’t wait to start applying now that we’re back at work.

Our Notes on DriesNote

“Pittsburgh is a city where things are made. A little like Drupal.”

That’s from this year’s DriesNote, the annual speech Drupal founder Dries Buytaert delivers to kick off DrupalCon and shed light on what he sees as the biggest challenges and opportunities facing developers.

This year’s call to action? Innovate. Think beyond what’s possible to create bigger and better software solutions. Find new and interesting ways to power digital experiences with Drupal. And embrace revolutionary technology like Drupal’s new ChatGPT integration and the soon-to-launch Drupal 10.1.0.

Dries also presented the entrants for the 2023 Pitch-burgh Innovation Contest — an opportunity for Drupal users to win funding for their unique ideas. A panel of judges selected seven finalists, then the audience chose five winners. Drupal awarded a collective $108,000 to fund their submissions.

Watch DriesNote 2023 on YouTube

Session Takeaways

After DriesNote, we squeezed our way into a few (literally and figuratively) jam-packed sessions focused on tech, UX, design, and so much more. Here are the top takeaways from the sessions we attended.

1. The future of Drupal could be headless.

Josh Koenig, CEO and co-founder at Pantheon, led a session about JavaScript and the future of Drupal, offering his hot takes on where Drupal has been and where it may need to go to remain successful.

He noted that while Drupal didn’t start out as enterprise software, that’s exactly what it has become. Yet, there’s some work to do for Drupal to seamlessly integrate into the enterprise space.

According to Josh, decoupled and headless CMS solutions are the future of enterprise software, while new developers entering the workforce are focusing more on modern JavaScript skills and less on PHP and Twig. At the same time, current administrator interfaces in the headless space are too rudimentary, decoupled solutions are too complex, and widespread adoption of a single open-source, headless CMS hasn’t happened yet.

These are all gaps Drupal and its users could look to fill.

2. User personas are (mostly) out. Mindset-focused design is in.

“Death to Personas” was the title of one of our favorite sessions, led by Mediacurrent Head of Product Elliott Mower. Now, before we go on, let’s get this out of the way: We like personas at Oomph. And many of the audience members at this session agreed.

Yet, Elliott suggested there’s a different (and, perhaps, better) way to design inclusive web experiences. He believes that user personas are a hangover from the days when software for non-developers was still cutting-edge. Personas can also be hyper-specific or over-generalized, neither of which lends itself to accessible and impactful user experiences.

After all, your customers’ needs aren’t a number; they’re the product of complex driving forces that can’t be distilled into a set of demographics. Elliott encouraged the audience to instead think qualitatively, specifically about the customer’s mindset, to build more meaningful customer experiences.

Watch the full Death to Personas session on YouTube

3. Authenticity is the foundation for long-lasting client relationships.

In a world where everyone is serving someone — clients, bosses, coworkers — how do you create relationships with staying power? Authenticity.

Palantir.net Customer Success Manager Britany Acre spoke about how the days of emotionless professionalism are over. People want to be humanized, to be seen for the whole person they are both within and outside of the workplace.

Communicating authentically, showing concern, and even sharing when we’re having a bad day can, according to Britany, foster the trust that drives genuine partnership.

Watch the full Authenticity is Contagious session on YouTube

Welcoming Women in Tech

Recent research shows that women make up only 25% of workers in the technology sector. Jess Romeo, Director of Web Publishing Platforms at Pfizer, offered reasons for and solutions to this disparity during her Women in Tech keynote.

Jess said many women wonder if they need a computer science degree and don’t know what opportunities for advancement exist in the field. Many women also feel the pressure to put work before their family — an expectation that’s slowly but surely dying out.

She also offered these words of encouragement for women working toward a career in tech:

- The “rules” of the tech industry have dramatically changed since COVID-19

- Women are welcome in tech, more than ever before

- There has never been more awareness and action on diversity and inclusion

Watch the full Women in Tech keynote on YouTube

Birds of a Feather Sessions

DrupalCon’s Birds of a Feather (BoF) sessions are an opportunity to network and collaborate with like-minded people. These informal gatherings feature open discussions about Drupal-related topics, like technical issues and business challenges.

People love BoF sessions because they’re more of-the-moment, usually organized while DrupalCon is in full swing. This year, organizers accepted ideas in advance, and we appreciated the opportunity to have more structured and thoughtful conversations.

For us, the BoF sessions are also a chance to share our skills and perspective with newer Drupal builders. Because of our involvement in the Drupal Scholarship Program, our lead back-end engineer, Phil, also had the opportunity to mentor up-and-coming engineers during the sessions.

Getting Social

Oomph just wants to have fu-un. (Get the reference?)

It’s true, though! As much as we worked, we played. We took our team out to a Pittsburgh Pirates Monday night ball game and watched the Pirates take home a 5-4 win over the Oakland Athletics.

During what little spare time we had, we also connected with old friends — and made new ones — at the Pantheon and Acquia-hosted special events.

DrupalCon Swag

Did you even go to a conference if you came home without swag?

Our favorites were the plush Drupal droplets at the Northern Commerce booth, a nod to the Dutch word “druppel,” which translates to “drop” in English and is Drupal’s namesake.

Pantheon also gave away t-shirts (with four different design options) that our team will be repping back at Oomph HQ.

As always, DrupalCon left us energized and excited to continue contributing to the future of web dev. We’re already looking forward to 2024!

THE BRIEF

Connecting People and Planet

NEEF’s website is the gateway that connects its audiences to a vast array of learning experiences – but its existing platform was falling short. The organization needed more visually interesting resources and content, but it also knew its legacy Drupal site couldn’t keep up.

NEEF wanted to build a more powerful platform that could seamlessly:

- Communicate its mission and showcase its impact to inspire potential funders

- Broaden its audience reach through enhanced accessibility, content, and SEO

- Be a valuable resource by providing useful and engaging content, maps, toolkits, and online courses

- Build relationships by engaging users on the front end with easy-to-use content, then seamlessly channeling that data into back-end functionality for user-based tracking

THE APPROACH

Strategy is the foundation for effective digital experiences and the intuitive designs they require. Oomph first homed in on NEEF’s key goals, then implemented a plan to meet them: leveraging existing features that work, adding critical front- and back-end capabilities, and packaging it all in an engaging, user-centric new website.

Information architecture is at the core of user experience (UX). We focused on organizing NEEF’s information to make it more accessible and appealing to its core audiences: educators, conservationists, nonprofits, and partners. Our designers then transformed that strategy into wireframes and dynamic designs, all of which we developed into a custom Drupal site.

The New NEEF: User-Centered Design

A Custom Site To Fuel Connection

NEEF needed a digital platform as unique as its organization, which is why Oomph ultimately delivered a suite of custom components designed to accommodate a variety of content needs.

Engaging and thoughtful design

NEEF’s new user experience is simple and streamlined. Visual cues aid in wayfinding (all Explore pages follow the same hero structure, for example), while imagery, micro-interactions (such as hover effects), and a bold color palette draw the user in. The UX also emphasizes accessibility and inclusivity; the high contrast between the font colors and the background makes the website more readable for people with visual impairments, while people with different skin tones can now see themselves represented in NEEF’s new library of 100 custom icons.

Topic-based browsing

From water conservation to climate change, visitors often come to the NEEF site to learn about a specific subject. We overhauled NEEF’s existing site map to include topic-based browsing, with pages that roll resources, storytelling, and NEEF’s impact into one cohesive package. Additional links in the footer also make it easier for specific audiences to find information, such as nonprofits seeking grants or teachers looking for educational materials.

NPLD-hosted resources and event locator

Oomph refreshed existing components and added new ones to support one of NEEF’s flagship programs, National Public Lands Day (NPLD). People interested in hosting an event can use the new components to easily set one up, manage it from their own dashboard, and add it to NEEF’s event locator. Once the event has passed, it’s automatically unlisted from the locator but archived, so hosts can duplicate and relaunch the event in future years.

THE RESULTS

Protecting the Planet, One User at a Time

Oomph helped NEEF launch its beautiful, engaging, and interactive site in May 2023. Within three months, NEEF’s team had built more than 100 new landing pages using the new component library, furthering its goal to build deeper connections with its audiences.

As NEEF’s digital presence continues to grow, so will its impact — all with the new custom site as its foundation.

Among enterprise-scale organizations, from healthcare to government to higher education, we’ve seen many content owners longing for a faster, easier way to manage content-rich websites. While consumer-level content platforms like Squarespace or Wix make it easy to assemble web pages in minutes, most enterprise-level platforms prioritize content governance, stability, and security over ease of use.

Which is a nice way of saying, sometimes building a new page is as much fun as getting a root canal.

That’s why we’re excited about Site Studio, a robust page-building tool from our partners at Acquia. Site Studio makes content editing on Drupal websites faster and more cost-effective, while making it easy for non-technical users to create beautiful, brand-compliant content.

In this article, we’ll explain what Site Studio is, why you might want it for your next Drupal project, and a few cautions to consider.

What is Site Studio?

Formerly known as Cohesion, Site Studio is a low-code visual site builder for Drupal that makes it easy to create rich, component-based pages without writing code in PHP, HTML, or CSS. Essentially, it’s a more feature-rich alternative to Drupal’s native design tool, Layout Builder.

How does Site Studio work? Site developers lay the groundwork by building a component library and reusable templates with brand-approved design elements, such as hero banners, article cards, photo grids, buttons, layouts, and more. They can either create custom components or customize existing components from a built-in UI kit.

Content editors, marketers, and other non-technical folks can then create content directly in the front end of the website, using a drag-and-drop visual page builder with a full WYSIWYG interface and real-time previews.

Who is Site Studio For?

In our experience, the businesses that benefit most from a powerful tool like Site Studio tend to be enterprise-level organizations with content-rich websites — especially those that own multiple sites, like colleges and universities.

Within those organizations, there are a number of roles that can leverage this tool:

Content owners

With Site Studio, marketers and content editors can browse to any web page they want to update, and edit both the content and settings directly on the page. Rewriting a header, swapping an image with a text box, or rearranging a layout can be done in just seconds.

Site builders

Using Drupal’s site configuration interfaces and Site Studio’s theming tools, site builders can easily create Drupal websites end-to-end, establishing everything from the information architecture to the content editing experience.

Brand managers

Managers can define sitewide elements, like headers and footers or page templates, to ensure that an organization’s branding and design preferences are carried out. They can also create sub-brand versions of websites that have unique styles alongside consistent brand elements.

IT and web teams

By putting content creation and updates in the hands of content authors, Site Studio frees up developers to work on more critical projects. In addition, new developers don’t need to have expert-level Drupal theming experience, because Site Studio takes care of the heavy lifting.

What Can You Do With Site Studio?

Site Studio makes it easy to create and manage web content with impressive flexibility, giving content owners greater control over their websites without risking quality or functionality. Here’s how.

Go to market faster.

Site Studio’s low-code nature and library of reusable components (the building blocks of a website) speed up both site development and content creation. Creators can quickly assemble content-rich pages, while developers can easily synchronize brand styles, components, and templates.

Site Studio provides a UI Kit with around 50 predefined components, such as Text, Image, Slider, and Accordion. Developers can also build custom components. Change any component in the library, and all instances of that component will update automatically. You can also save layout compositions as reusable “helpers” to streamline page creation.

Build beautiful pages easily.

While we love the power and versatility of Drupal, its page building function has never been as user-friendly as, say, WordPress. Site Studio’s Visual Page Builder brings the ease of consumer-level platforms to the enterprise website world.

This intuitive, drag-and-drop interface lets users add or rewrite text, update layouts, and change fonts, styles, colors, or images without any technical help. It’s also easy to create new pages using components or page templates from the asset library.

Ensure brand consistency.

With Site Studio, you can define standards for visual styles and UI elements at the component level. This provides guardrails for both front-end developers and content creators, who draw on the component library to build new pages. In addition, Site Studio’s import and sync capabilities make it easy to enforce brand consistency across multiple sites.

Get the best out of Drupal.

Because Site Studio is designed exclusively for Drupal, it supports many of Drupal’s core features. With Site Studio’s component library, for instance, you can create templates for core content types in Drupal. Site Studio also supports a number of contributed content modules (created by Drupal’s open-source community), so developers can add additional features that are compatible with Site Studio’s interface.

What Are Some Limitations of Using Site Studio?

There’s no doubt Site Studio makes life easier for everyone from marketers to web teams. But there are a few things to consider, in terms of resource costs and potential risks.

Start from the ground up.

To ensure the best experience, Site Studio should be involved in almost all areas of your website. Unlike other contributed modules, it’s not a simple add-on — plan on it being the core of your Drupal site’s architecture.

This will let you make decisions based on how Site Studio prefers a feature to be implemented, rather than bending Drupal to fit your needs (as is often the case). Staying within Site Studio’s guardrails will make development easier and faster.

Be careful with custom components.

With its recent Custom Components feature, Site Studio does let developers create components using their preferred code instead of its low-code tools. So, you can create a level of custom functionality, but you must work within Site Studio’s architecture and budget for the added development time and cost.

If you decide instead that for a given content type, you’re going to sidestep Site Studio and build something custom, you’ll lose access to all its components and templates — not to mention having to manage content in different systems, and pay for the custom development.

Rolling back changes is tough.

A standard Drupal site has two underlying building blocks: database and code. Drupal uses the code (written by developers) to carry out functions with the database.

When a developer changes, say, the HTML code for a blog title, the change happens in the code, not the database. If that change happened to break the page style, you could roll back the change by reverting to the previous code. In addition, most developers test changes first in a sandbox-type environment before deploying them to the live website.

By contrast, with Site Studio, most changes happen exclusively in the database and are deployed via configuration. This presents a few areas of caution:

- Users with the correct permissions can override configuration on a live site, which could impact site functionality.

- Database changes can have far-reaching impacts. If you have to roll back the database to fix a problem, you’ll lose any content changes that were made since the last backup.

That’s why Site Studio requires meticulous QA and careful user permissioning to prevent inadvertent changes that affect site functionality.

One Last Thing: You Still Need Developers

While it’s true that just about anyone in your organization can create pages with Site Studio’s intuitive interface, there are still aspects of building and maintaining a Drupal website that require a developer. Those steps include:

- Setup and implementation of Site Studio,

- Building reusable components and templates, and

- Back-end maintenance (like updates, compliance, and security).

However, once the components have been built, it’s easy for non-technical content owners to create beautiful pages. In the end, you’ll be able to launch websites and pages faster — with the creativity and consistent identity your brand deserves.

Interested in learning whether Site Studio is a good fit for your Drupal website? Contact us for more info.

We are thrilled to share that Oomph has been recognized as an Acquia Certified Drupal Cloud Practice for completing Acquia’s rigorous evaluation program, which recognizes the highest standards of technical delivery on the platform.

To earn Drupal Cloud Practice Certification, Acquia partners must meet a stringent set of technical criteria. These requirements include a core team of Acquia certified developers, significant hands-on experience delivering Acquia Drupal Cloud products to clients, and a meticulous company review with Acquia partner specialists.

“I am incredibly proud that our team has achieved this Acquia Practice Certification,” said Christopher Murray, CEO at Oomph. “We have a long history of delivering impactful client solutions around Drupal and Acquia and we are passionate and excited about extending our work within the Acquia ecosystem.”

The Acquia Practice Certification Program rewards partners who demonstrate a mastery of Acquia’s Cloud Platform in three separate areas: Drupal Cloud, Marketing Cloud and DXP. These certifications are awarded to organizations with a proven record of technical achievement, and a commitment to driving transformative business engagements on the Acquia Platform.

As a Certified Drupal Cloud Practice, Oomph receives the benefits of a deeper working relationship with Acquia, and heightened visibility as a trusted technical partner.

“We’re proud to recognize Oomph as a certified Drupal Cloud Practice,” said Mark Royko, Director of Practice Development at Acquia. “At Acquia, we continually strive to serve more customers while helping our valued partners grow their businesses. With Drupal Cloud certification, we know we can count on partners like Oomph to help us reach those goals.”

This honor is one of several Acquia accolades that Oomph has achieved since becoming an Acquia Partner in 2012. This year Oomph won a 2022 Acquia Engage Award for our work designing and building the platform that powers many of the websites operated by the state of Rhode Island.

“These certifications are awarded to organizations with a proven record of technical achievement, and a commitment to driving transformative business engagements on the Acquia Platform. It helps more customers realize the tremendous value of working with Acquia’s Drupal Cloud.”

— Christopher Murray, CEO

We’re always looking to expand our Acquia knowledge to help our partners make the most of their Drupal websites. Our team leads the way on emerging Drupal practices so we can advise our clients how to build innovative websites that help them forge the best connections with their audiences.

As an Acquia Partner we are excited to help you deliver great Drupal experiences. Start by reaching out. Contact us today to connect with an expert!

A job well done, as they say, is its own reward. At Oomph, we believe that’s true. But on the other hand, awards are pretty nice, too. And we’ve just won a big one: Oomph was named a winner of a 2022 Engage Award by digital platform experience provider Acquia for our work designing and building the platform that powers many of the websites operated by the state of Rhode Island.

Oomph won Leader of the Pack – Public Sector, in the Doers category, recognizing organizations that are setting the bar for digital experiences in their field or industry.

The award-winning project (see more in this case study) began with the goal of creating a new design system for the public-facing sites of Rhode Island’s government organizations, such as the governor’s office and the Office of Housing and Community Development. Oomph’s work on the sites, which began launching in December 2020, helped Rhode Island improve communications by developing common design elements and styles that appeared consistently across all the websites and by providing each agency the same tools and capabilities to keep their sites up to date and functioning at their best.

We’d Like to Thank…

The appreciation for the project has been especially satisfying for Rhode Island ETSS Web Services Manager Robert W. Martin, who oversaw it on the client side. “We are extremely proud to receive such recognition,” he says. “This accomplishment could not have happened without the public-private partnership between the State of Rhode Island, NIC RI, Oomph, and Acquia. Through the Oomph team’s high level of engagement, we were able to deliver the entire platform on time, on quality, and on budget, all during a global pandemic. A truly remarkable achievement!”

Folks have been excited on the Oomph side as well. “It’s an honor, really, and recognition of an excellent team effort,” says Jack Hartman, head of delivery with Oomph. “We went into this project knowing we were introducing the team to powerful new technologies such as Acquia Site Factory. Our successful delivery of the new platform for Rhode Island is a testament to Acquia feeling that we’ve not only utilized their services, but modeled how they feel their products can best be put to use in bringing value to their clients.”

A Remarkable Collaboration

Martin is quick to credit the project’s success to the Oomph team’s hard work, expertise and commitment to collaboration. He explains: “We were always impressed with Oomph’s breadth of technical knowledge and welcomed their UX expertise. However, what stood out the most to me was the great synergy that our teams developed.”

In keeping with that spirit of synergy, Hartman also is proud of how the teams came together to get the job done. “We’re all quite transparent communicators and effective collaborators. Those two attributes allowed us to successfully navigate the vast number of stakeholders and agency partners that were involved from the beginning to the end,” he says.

Planning for the Unplannable

A particular challenge no one foresaw in the early stages of the project was the COVID-19 pandemic. Though it wasn’t part of the initial strategy, the team quickly realized that due to COVID-19, they needed to change course and focus on COVID-related sites first, which made it possible to tap into COVID relief funding for the project overall.

“Our first site to roll out on the new platform was Rhode Island’s COVID-19 response communication hub,” says Hartman. “It added an extra layer of both seriousness and excitement knowing that we were building a critical resource for the people of Rhode Island as they navigated a really unpredictable pandemic scenario.”

The Future of Digital Infrastructure

Of course, even with the added responsibility and challenge of a global pandemic, this project already had plenty of excitement and investment from the Oomph team. “It’s not often that a team’s full time and focus is secured to work on a single initiative, and this engagement was very much that,” Hartman says. “A group of highly skilled craftspeople with the singular focus of laying the groundwork for the future of a state’s digital infrastructure.”

Still, all the internal excitement in the world doesn’t count for much if the end product doesn’t land with its audience, and in this case, the new sites were a resounding success. As the sites launched, they quickly met with approval from those who use the sites most: Rhode Island’s government agencies and the state’s citizens.

“Agencies have been extremely enthusiastic and excited for both the design and functionality of their new sites,” Martin says. “Some of the most common points of appreciation included the availability of content authoring and workflow approvals, flexible layout and content components, multiple color themes, support for ‘dark mode’ and other user customizations, multiple language support, system-wide notification alert capability, and a wide range of other features all available without the need for custom coding or advanced technical knowledge.”

According to Martin, the platform has been a hit with Rhode Island’s people as well. He says, “We have received very positive, unsolicited feedback from citizens around the state regarding their new RI State government websites.”

He adds, “Being named the winner of the Leader of the Pack – Public Sector in the 2022 Acquia Engage Awards is proof positive that we’re on track towards achieving our service delivery goals.”

One More Trophy for the Case

Of course, with a big project like this, there’s always plenty to learn. Hartman points to the process of migrating the state’s vast trove of legacy content to the new sites as a key area that will inform future client work.

But enough lessons: for now, the team is celebrating the big win.

“Our fearless leader Chris Murray attended the awards ceremony in Miami,” Hartman says. “We have an awards wall at the office where we display everything we’ve won from design accolades to this prestigious award. I have no doubt the Acquia Award will be there the next time I visit the office.”

And after redesigning the entire web presence for Rhode Island’s many state agencies and completely revamping how the state communicates with its citizens, surely the Oomph team are now honorary members of Rhode Island’s government?

“Absolutely,” Hartman laughs. “And as my first initiative as a member of this great state’s government, I’ll be bringing the PawSox back to the Bucket!”

Interested in learning more about Oomph’s award-winning work? We don’t blame you. View more of our case studies to see how we make a difference for clients nationwide.

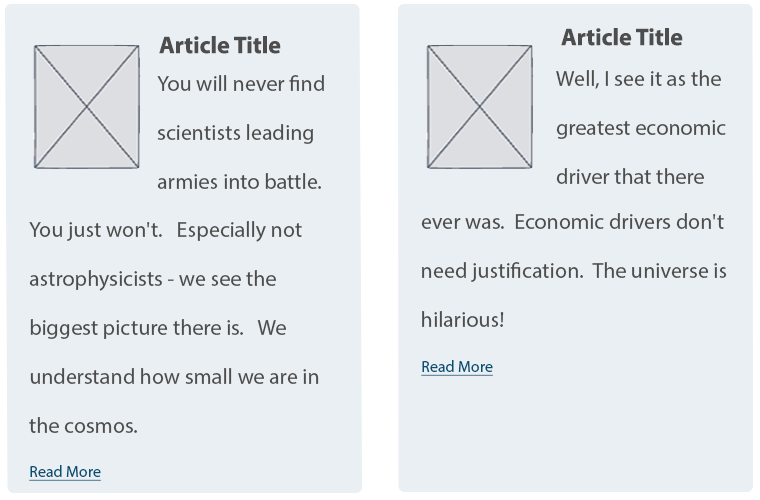

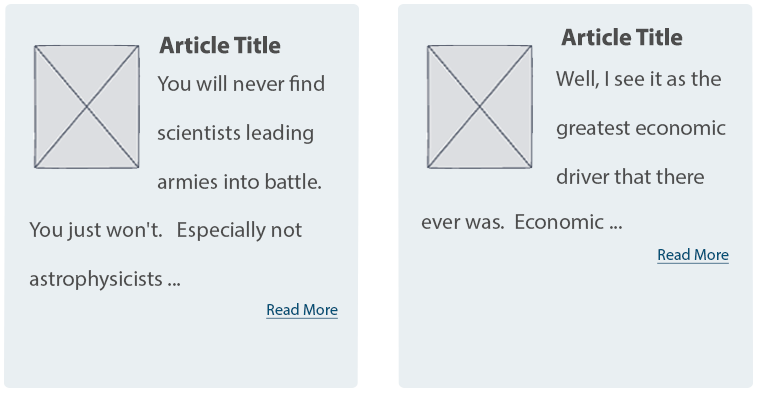

A “Read More” Teaser is still a common UX pattern; it contains a title, often a summary, sometimes a date or author info, and, of course, a Read More link. The link text itself may differ — “read more”, “learn more”, “go to article”, and so on — but the function is the same: tell the user that what they see is only a preview, and link them to the full content.

In 2022, I’d argue that “Read More” links on Teaser components are more of a design feature than a functional requirement; they add a visual call to action to a list of Teasers or Cards, but they aren’t necessary for navigation or clarity.

Although I see fewer sites using this pattern recently, it’s still prevalent enough on the web and in UX designs to justify discussing it.

If you build sites with Drupal, you have two simple options to implement a Read More Teaser. Both methods have some drawbacks, and both result in adjacent and non-descriptive links.

I’ll explore both options in detail and discuss how to resolve accessibility concerns with a little help from Twig. (Spoiler: Twig gives us the best markup without any additional modules.)

Using Core

Without adding contributed modules or code, Drupal Core gives you a long, rich text field type for storing lengthy content and a summary of that content. It’s a single field that acts like two. In the UI, this field type is called Text (formatted, long, with summary), and it does exactly what the name describes.

Drupal installs two Content Types that use this field type for the Body – Article and Basic Page – but it can be added to any Content Type that needs both a full and a summarized display.

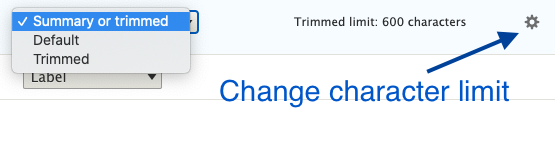

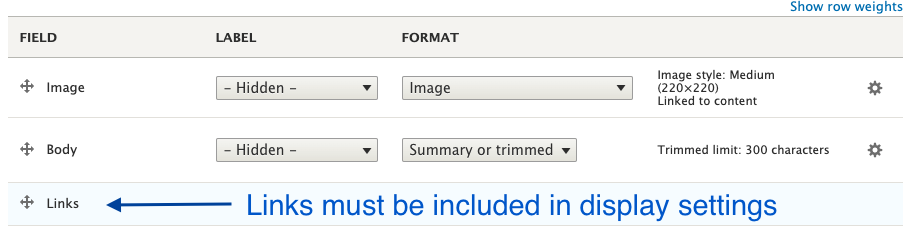

In the Manage Display settings of the Teaser or other summarized view mode, the Summary or Trimmed format is available. The default character limit is 600, but this can be configured higher or lower.

Finally, in the Manage Display settings, the Links field must be added to render the Read More link.

That’s all you need to create a Read More Teaser in just a few minutes with Drupal Core, but this method has some shortcomings.

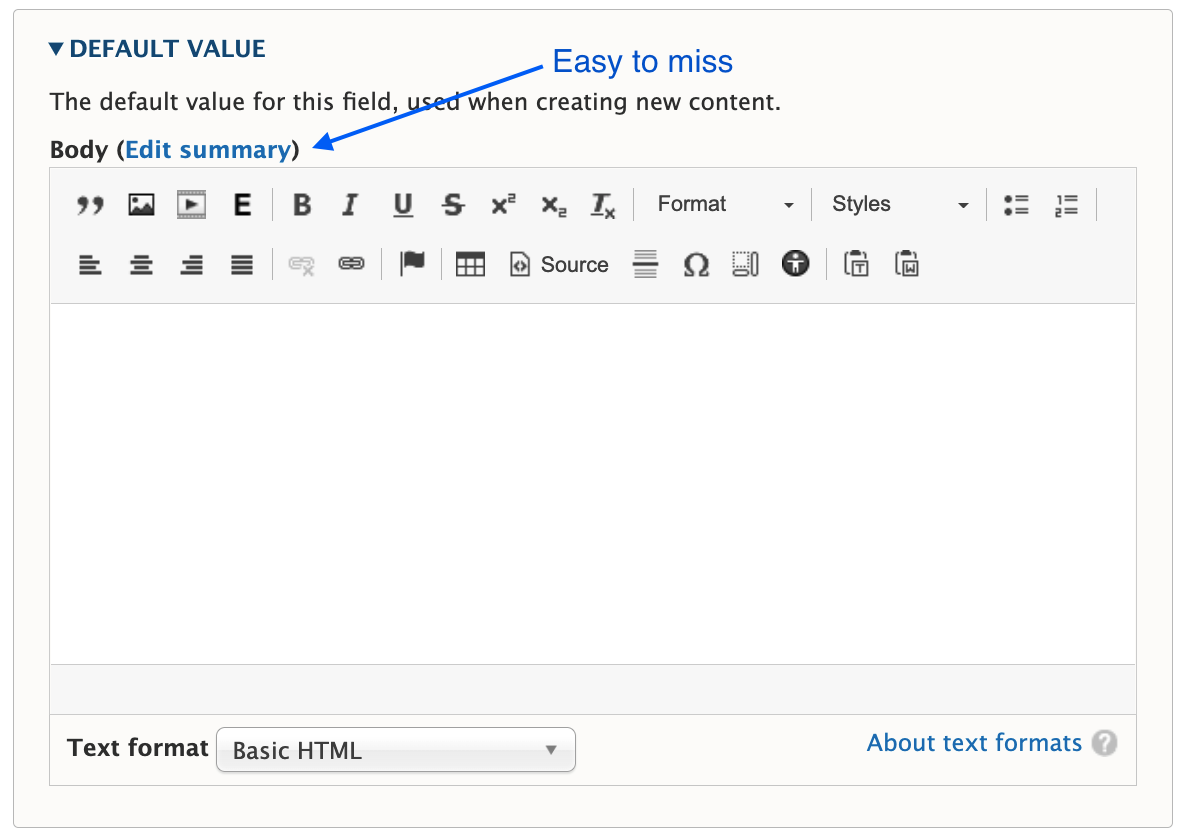

The summary field is easy to miss

Unless you require the summary, it’s often overlooked during content creation. A user has to click the Edit summary link to add a summary, and it’s easy to miss or forget.

You have to choose one or the other format

Notice the word “or” in the Summary or Trimmed format. Out of the gate, you can display what’s in the summary field, in its entirety, or you can display a trimmed version of the Body field. But you can’t display a trimmed version of the summary.

Expect the unexpected when a summary isn’t present

If a summary is not populated, Drupal attempts to trim the Body field to the last full sentence, while staying under the character limit. The problem is the unpredictable nature of content in a rich text field. These may be edge cases, but I’ve seen content editors insert images, headings, dates, and links as the first few elements in the Body field. When the content starts with something other than paragraph text, the Teaser display will, too.
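To make the fallback concrete, here is a minimal Python sketch of the behavior described above: use the summary when one exists, otherwise cut the body at the last full sentence that fits under the character limit. It is an illustration only, not Drupal’s actual PHP code. The function names `trim_to_sentence` and `teaser_text` are hypothetical, and the sketch assumes plain text, so it ignores the rich-text edge cases mentioned above.

```python
import re


def trim_to_sentence(text: str, limit: int = 600) -> str:
    """Cut text at the last sentence end that fits within `limit` characters.

    Hypothetical helper for illustration; Drupal's real logic is PHP and
    also has to cope with HTML, which this sketch does not.
    """
    if len(text) <= limit:
        return text
    window = text[:limit]
    # Positions just after each '.', '!' or '?' that is followed by
    # whitespace or by the end of the window.
    ends = [m.end() for m in re.finditer(r"[.!?](?=\s|$)", window)]
    if ends:
        return window[:ends[-1]]
    # No sentence boundary found: fall back to the last whole word.
    cut = window.rfind(" ")
    return window[:cut] if cut > 0 else window


def teaser_text(summary: str, body: str, limit: int = 600) -> str:
    """Mimic "Summary or Trimmed": the whole summary, or a trimmed body."""
    if summary.strip():
        return summary  # shown in full, never trimmed
    return trim_to_sentence(body, limit)


body = "Rivers need care. Wetlands filter water. Forests store carbon."
print(teaser_text("", body, limit=45))
print(teaser_text("A hand-written summary.", body, limit=45))
```

Note that the summary branch returns the text untouched, which is why Teasers with hand-written summaries can end up with very different lengths.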

Expect varying length Teasers when a summary is present

Depending on your layout or visual preference, this may not be a concern. But unless you’re judicious about using a standard or similar length for every summary, you’ll have Teasers of varying length, which look awkward when they are displayed side by side.

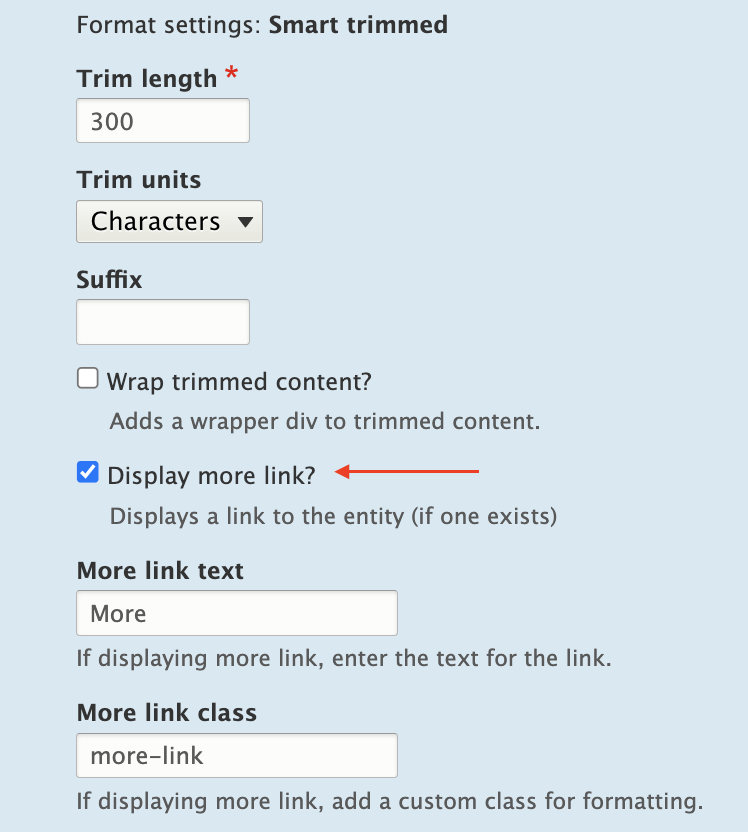

Using the Smart Trim Module

The contributed Smart Trim module expands on Core’s functionality with additional settings to “smartly” trim the field it’s applied to. The Smart Trim module’s description states:

With smart trim, you have control over:

- The trim length

- Whether the trim length is measured in characters or words

- Appending an optional suffix at the trim point

- Displaying an optional “More” link immediately after the trimmed text

- Stripping out HTML tags from the field

I won’t cover all of the features of Smart Trim, because it’s well documented already. Smart Trim solves one of the problems noted earlier: it lets you trim the summary. That said, Smart Trim has some of the same drawbacks as Core’s trim feature, along with some limitations of its own.

Sentences aren’t respected

Unlike Core’s trim feature, Smart Trim doesn’t look for full sentences. Trimming stops as soon as it reaches the defined character or word limit, even if that falls mid-sentence.

Strip HTML might be helpful, or it might not

If your goal is rendering plain text without any HTML (something you might otherwise have to code in a template), Smart Trim’s Strip HTML setting is exactly what you need.

It’s also helpful for dealing with some of those edge cases where content starts with an element other than paragraph text. For example, if the first element in the Body field is an image, followed by paragraph text, the Strip HTML setting works nicely. It strips the image entirely and displays the text that follows in a trimmed format.

But be aware that Strip HTML also strips paragraph tags. If the first element in the Body field is a heading, followed by paragraph text, all tags are stripped, and everything runs together as one large block of text.

“Immediately after” doesn’t mean immediately after

If you want the Read More link to display immediately after the trimmed text, as the module description suggests, you’ll need to assign a class in the Smart Trim settings with a display other than block.

What about adjacent link and accessibility issues?

If you create a Read More Teaser using either the Core or Smart Trim methods described here, there are a couple of problems.

Either method results in markup something like this:

HTML

<article>
  <h2>
    <a href="/article/how-bake-cake">How to Bake a Cake</a>
  </h2>
  <div class="node__content">
    <div class="node__summary">
      <p>Practical tips on cake baking that you can't find anywhere else</p>
    </div>
    <div class="node__links">
      <ul class="links inline">
        <li class="node__readmore">
          <a href="/article/how-bake-cake" title="How to Bake a Cake">Read more</a>
        </li>
      </ul>
    </div>
  </div>
</article>

The <a> inside the <h2> contains descriptive text, which is necessary according to Google’s Link text recommendations and Mozilla’s Accessibility guidelines for link text.

But the Read More link has text that is not descriptive. Furthermore, it navigates to the same resource as the first <a>, making it an adjacent or redundant link. Without any other intervention, this markup causes repetition for keyboard and assistive technology users.

How do you make it better?

WCAG includes a technique for handling adjacent image and text links that point to the same resource. You can use this same logic and wrap the entire Teaser content with a single link. But for this, you need Twig.

Here’s an example of how to structure a Twig template to create the link-wrapped Teaser.

node--article--teaser.html.twig:

Twig

<article{{ attributes.addClass(classes) }}>
  <div{{ content_attributes.addClass('node__content') }}>
    <a href="{{ url }}">
      <div class="wrapper">
        <h2{{ title_attributes.addClass('node__title') }}>
          {{ label }}
        </h2>
        {{ content.body }}
      </div>
    </a>
  </div>
</article>

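But while this solves the adjacent link issue, it creates another problem. When you combine this template with the Manage Display settings that insert Read More links, the rendered page ends up with many, many duplicate links. The Read More link now sits inside the wrapping link, and because HTML doesn’t allow one anchor nested inside another, the browser breaks the outer anchor apart when it parses the page.

Instead of the Teaser content being wrapped in a single anchor tag, anchors are inserted around every individual element (and sometimes around nothing). The markup is worse than before: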

HTML

<div class="node__content">
  <a href="/article/how-bake-cake"> </a>
  <div class="wrapper">
    <a href="/article/how-bake-cake">
      <h2 class="node__title">How to Bake a Cake</h2>
    </a>
    <div class="field field--name-body field--type-text-with-summary">
      <a href="/article/how-bake-cake">
        <div class="field__label visually-hidden">Body</div>
      </a>
      <div class="field__item">
        <a href="/article/how-bake-cake"></a>
        <div class="trimmed">
          <a href="/article/how-bake-cake">
            <p>Practical tips on cake baking that you can’t find anywhere else</p>
          </a>
          <div class="more-link-x">
            <a href="/article/how-bake-cake"></a>
            <a href="/article/how-bake-cake" class="more-link-x" hreflang="en">More</a>
          </div>
        </div>
      </div>
    </div>
  </div>
</div>

If you remove Smart Trim’s Read more link or Core’s Links field, the duplicate links go away, but so does the Read More text.

HTML

<div class="node__content">
  <a href="/article/how-bake-cake">
    <div class="wrapper">
      <h2 class="node__title">How to Bake a Cake</h2>
      <div class="field field--name-body field--type-text-with-summary">
        <div class="field__label visually-hidden">Body</div>
        <div class="field__item">
          <p>Practical tips on cake baking that you can’t find anywhere else</p>
        </div>
      </div>
    </div>
  </a>
</div>

Because we’ve already enlisted the help of Twig to fix the adjacent link issue, it’s easy enough to also use Twig to re-insert the words “Read More.” This creates the appearance of a Read More link while still wrapping all of the Teaser contents in a single anchor tag.

Twig

<article{{ attributes.addClass(classes) }}>
  <div{{ content_attributes.addClass('node__content') }}>
    <a href="{{ url }}">
      <div class="wrapper">
        <h2{{ title_attributes.addClass('node__title') }}>
          {{ label }}
        </h2>
        {{ content.body }}
        <span class="node__readmore">{{ 'Read More'|trans }}</span>
      </div>
    </a>
  </div>
</article>

Resulting HTML:

HTML

<div class="node__content">
  <a href="/article/how-bake-cake">
    <div class="wrapper">
      <h2 class="node__title">How to Bake a Cake</h2>
      <div class="field field--name-body field--type-text-with-summary">
        <div class="field__label visually-hidden">Body</div>
        <div class="field__item">
          <p>Practical tips on cake baking that you can’t find anywhere else</p>
        </div>
      </div>
      <span class="node__readmore">Read More</span>
    </div>
  </a>
</div>

Do it all with Twig

Of course, you can skip both Core’s trimming feature and the Smart Trim module and do everything you need with Twig.

Using the same Twig template, you can strip the HTML from the Body field’s value, cut it at a set character length, drop the trailing partial word, and append an ellipsis along with the “Read More” text.

Something like the following should do the trick in most cases.

node--article--teaser.html.twig:

Twig

<article{{ attributes.addClass(classes) }}>
  <div{{ content_attributes.addClass('node__content') }}>
    <a href="{{ url }}">
      <div class="wrapper">
        <h2{{ title_attributes.addClass('node__title') }}>
          {{ label }}
        </h2>
        {{ node.body.0.value|striptags|slice(0, 175)|split(' ')|slice(0, -1)|join(' ') ~ '...' }}
        <span class="node__readmore">{{ 'Read More'|trans }}</span>
      </div>
    </a>
  </div>
</article>
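
One edge case to watch with the filter chain above: it always drops the last word and appends the ellipsis, even when the whole Body text already fits within the limit. A minimal sketch of one way to guard against that follows. The variable names teaser_limit and plain_body are made up for illustration, and the snippet would stand in for the single truncation line inside the same template.

```twig
{# Sketch only: teaser_limit and plain_body are hypothetical names, not Drupal variables. #}
{% set teaser_limit = 175 %}
{% set plain_body = node.body.0.value|striptags|trim %}

{% if plain_body|length > teaser_limit %}
  {# Too long: cut at the limit, drop the last (possibly partial) word, add the ellipsis. #}
  {{ plain_body|slice(0, teaser_limit)|split(' ')|slice(0, -1)|join(' ') ~ '...' }}
{% else %}
  {# Already short enough: print the text whole, with no ellipsis. #}
  {{ plain_body }}
{% endif %}
```

Setting the limit once in a variable also keeps the length check and the slice in sync if you change the number later.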

Final Thoughts

Today’s internet doesn’t need a prompt on each Teaser or Card to indicate that there is, in fact, more to read. The Read More Teaser feels like a relic from bygone days when, perhaps, it wasn’t obvious that a Teaser was only a summary.

But until we, the creators of websites, collectively decide to abandon the Read More Teaser pattern, developers will have to keep building them. And we should make them as accessible as we can in the process.

Although Drupal provides simple methods to implement this pattern, it’s the templating system that really shines here and solves the accessibility problems.


Is your digital platform still on Drupal 7? By now, hopefully, you’ve heard that this revered content management system is approaching its end of life. In November 2023, official Drupal 7 support from the Drupal community will end, including bug fixes, critical security updates, and other enhancements.

If you’re not already planning for a transition from Drupal 7, it’s time to start.

With nearly 12 million websites currently hacked or infected, Drupal 7’s end of life carries significant security implications for your platform. In addition, any plug-ins or modules that power your site won’t be supported, and site maintenance will depend entirely on your internal resources.

Let’s take a brief look at your options for transitioning off Drupal 7, along with five crucial planning considerations.

What Are Your Options?

In a nutshell, you have two choices: upgrade to Drupal 9, or migrate to a completely new CMS.

With Drupal 9’s advanced features and functionalities, migrating from Drupal 7 to 9 involves much more than applying an update to your existing platform. You’ll need to migrate all of your data to a brand-new Drupal 9 site, with a whole new theme system and platform requirements.

Drupal 9 also requires a different developer skill set. If your developers have been on Drupal 7 for a long time, you’ll need to factor a learning curve into your schedule and budget, not only for the migration but also for ongoing development work and maintenance after the upgrade.

As an alternative, you could take the opportunity to build a new platform with a completely different CMS. This might make sense if you’ve experienced a lot of pain points with Drupal 7, although it’s worth investigating whether those problems have been addressed in Drupal 9.

Drupal 10

What of Drupal 10, you ask? Drupal 10 is slated to be released in December 2022, but it will take some time for community-contributed modules to be updated to support the new version. Once they’re ready, updating from Drupal 9 to Drupal 10 should be a breeze.

Preparing for the Transition

Whether you decide to migrate to Drupal 9 or a new CMS, your planning should include the following five elements:

Content Audit

Do a thorough content inventory and audit, examine user analytics, and revisit your content strategy, so you can identify which content is adding real value to your business (and which isn’t).

Some questions to ask:

- Does your current content support your strategy and business goals?

- How much of the content is still relevant for your platform?

- Does the content effectively engage your target audience?

Another thing to consider is your overall content architecture: Is it a Frankenstein that needs refinement or revision? Hold that thought; in just a bit, we’ll cover some factors in choosing a CMS.

Design Evaluation

As digital experiences have evolved, so have user expectations. Chances are, your Drupal 7 site is starting to show its age. Be sure to budget time for design effort, even if you would prefer to keep your current design.

Drupal 7 site themes can’t be moved to a new CMS, or a different version of Drupal, without a lot of development work. Even if you want to keep the existing design, you’ll have to retheme the entire site, because you can’t apply your old theme to a new backend.

You’ll also want to consider the impact of a new design and architecture on your existing content, as there’s a good chance that even using an existing theme will require some content development.

Integrations

What integrations does your current site have, or need? How relevant and secure are your existing features and modules? Be sure to identify any modules that have been customized or are not yet compatible with Drupal 9, as they’ll likely require development work if you want to keep them.

Are your current vendors working well for you, or is it time to try something new? There are more microservices available than ever, offering specialized services that provide immense value with low development costs. Plus, much of the functionality that required contributed modules in Drupal 7 is now part of Drupal 9 core.

CMS Selection & Architecture

Building the next iteration of your platform requires more than just CMS selection. It’s about defining an architecture that balances cost with flexibility. Did your Drupal 7 site feel rigid and inflexible? What features do you wish you had – more layout flexibility, different integrations, more workflow tools, better reporting, a faster user interface?

For content being brought forward, will migrating be difficult? Can it be automated? Should it be? If you’re not upgrading to Drupal 9, you could take the opportunity to switch to a headless CMS product, where the content repository is independent of the platform’s front end. In that case, you’d likely be paying for a product with monthly fees but no need for maintenance. On the flip side, many headless CMS platforms (Contentful, for example) don’t allow for customization.

Budget Considerations

There are three major areas to consider in planning your budget: hosting costs, feature enhancements, and ongoing maintenance and support. All of these are affected by the size and scope of your migration and the nature of your internal development team.

Key things to consider:

- Will the newly proposed architecture be supported by existing hosting? Will you need one vendor or several (if you’re using microservices)?

- If you take advantage of low- or no-cost options for hosting, you could put the cost savings toward microservices for feature enhancements.

- Given Drupal 9’s platform requirements, you’ll need to factor in the development costs of upgrading your platform infrastructure.