THE BRIEF

Connecting People and Planet

NEEF’s website is the gateway that connects its audiences to a vast array of learning experiences – but its existing platform was falling short. The organization needed more visually interesting resources and content, but it also knew its legacy Drupal site couldn’t keep up.

NEEF wanted to build a more powerful platform that could seamlessly:

- Communicate its mission and showcase its impact to inspire potential funders

- Broaden its audience reach through enhanced accessibility, content, and SEO

- Be a valuable resource by providing useful and engaging content, maps, toolkits, and online courses

- Build relationships by engaging users on the front end with easy-to-use content, then seamlessly channeling that data into back-end functionality for user-based tracking

THE APPROACH

Strategy is the foundation for effective digital experiences and the intuitive designs they require. Oomph first homed in on NEEF’s key goals, then implemented a plan to meet them: leveraging existing features that work, adding critical front- and back-end capabilities, and packaging it all in an engaging, user-centric new website.

Information architecture is at the core of user experience (UX). We focused on organizing NEEF’s information to make it more accessible and appealing to its core audiences: educators, conservationists, nonprofits, and partners. Our designers then translated that strategy into wireframes and dynamic designs, all of which we developed into a custom Drupal site.

The New NEEF: User-Centered Design

A Custom Site To Fuel Connection

NEEF needed a digital platform as unique as its organization, which is why Oomph ultimately delivered a suite of custom components designed to accommodate a variety of content needs.

Engaging and thoughtful design

NEEF’s new user experience is simple and streamlined. Visual cues aid in wayfinding (all Explore pages follow the same hero structure, for example), while imagery, micro-interactions (such as hover effects), and a bold color palette draw the user in. The UX also emphasizes accessibility and inclusivity; the high contrast between the font colors and the background makes the website more readable for people with visual impairments, while people with different skin tones can now see themselves represented in NEEF’s new library of 100 custom icons.

Topic-based browsing

From water conservation to climate change, visitors often come to the NEEF site to learn about a specific subject. We overhauled NEEF’s existing site map to include topic-based browsing, with pages that roll resources, storytelling, and NEEF’s impact into one cohesive package. Additional links in the footer also make it easier for specific audiences to find information, such as nonprofits seeking grants or teachers looking for educational materials.

NPLD-hosted resources and event locator

Oomph refreshed existing components and added new ones to support one of NEEF’s flagship programs, National Public Lands Day (NPLD). People interested in hosting an event can use the new components to easily set one up, manage it from their own dashboard, and add it to NEEF’s event locator. Once the event has passed, it’s automatically unlisted from the locator but archived, so hosts can duplicate and relaunch it in future years.

THE RESULTS

Protecting the Planet, One User at a Time

Oomph helped NEEF launch its beautiful, engaging, and interactive site in May 2023. Within three months, NEEF’s team had built more than 100 new landing pages using the new component library, furthering its goal to build deeper connections with its audiences.

As NEEF’s digital presence continues to grow, so will its impact — all with the new custom site as its foundation.

More than two years after Google announced the launch of its powerful new website analytics platform, Google Analytics 4 (GA4), the final countdown to make the switch is on.

GA4 will officially replace Google’s previous analytics platform, Universal Analytics (UA), on July 1, 2023. It’s the first major analytics update from Google since 2012 — and it’s a big deal. As we discussed in a blog post last year, GA4 uses big data and machine learning to provide a next-generation approach to measurement, including:

- Unifying data across multiple websites and apps

- A new focus on events vs. sessions

- Cookieless user tracking

- More personalized and predictive analytics

At Oomph, we’ve learned a thing or two about making the transition seamless while handling GA4 migrations for our clients – including a few platform “gotchas” that are definitely better to know in advance. Before you start your migration, do yourself a favor and explore our GA4 setup guide.

Your 12-Step GA4 Migration Checklist

Step 1: Create a GA4 Analytics Property and Implement Tagging

The Gist: Launch the GA4 setup assistant to create a new GA4 property for your site or app. For sites that already have UA installed, Google will begin creating GA4 properties automatically in March 2023 (unless you opt out). If you’re migrating from UA, you can connect your UA property to your GA4 property to use the existing Google tracking tag on your site. For new sites, you’ll need to add the tag directly to your site or via Google Tag Manager.

The Gotcha: During property setup, Google will ask you which data streams you’d like to add (websites, apps, etc.). This is simple if you’re just tracking one site, but gets more complex for organizations with multiple properties, like educational institutions or retailers with individual locations. While UA allowed you to separate data streams by geography or line of business, GA4 handles this differently. This Google guide can help you choose the ideal configuration for your business model.

Step 2: Update Your Data Retention Settings

The Gist: GA4 lets you control how long you retain data on users and events before it’s automatically deleted from Google’s servers. For user-level data, including conversions, you can hang on to data for up to 14 months. For other event data, you have the option to retain the information for 2 months or 14 months.

The Gotcha: The data retention limits are much shorter than they were in UA, which allowed you to keep Google-signals data for up to 26 months in some cases. The default retention setting in GA4 is 2 months for some types of data – a surprisingly short window, in our opinion – so be sure to extend it to avoid data loss.

Step 3: Initialize BigQuery

The Gist: Have a lot of data to analyze? GA4 integrates with BigQuery, Google’s cloud-based data warehouse, so you can store historical data and run analyses on massive datasets. Google walks you through the steps here.

The Gotcha: Since GA4 has tight time limits on data retention as well as data limits on reporting, skipping this step could compromise your reporting. BigQuery is a helpful workaround for storing, analyzing, and visualizing large amounts of complex data.

Step 4: Configure Enhanced Measurements

The Gist: GA4 measures much more than pageviews – you can now track actions like outbound link clicks, scrolls, and engagements with YouTube videos automatically through the platform. When you set up GA4, simply check the box for any metrics you want GA4 to monitor. You can still use Google tags to customize tracking for other types of events or use Google’s Measurement Protocol for advanced tracking.

The Gotcha: If you were previously measuring events through Google tags that GA4 will now measure automatically, take the time to review which ones to keep to avoid duplicating efforts. It may be simpler to use GA4 tracking – giving you a good reason to do that Google Tag Manager cleanup you’ve been meaning to get to.
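For teams curious about the Measurement Protocol route mentioned in Step 4, here is a minimal Python sketch of what a request could look like. It only builds the URL and JSON body and sends nothing. The measurement ID, API secret, client ID, event name, and parameter are all placeholders we made up for illustration, so check Google’s Measurement Protocol documentation before relying on the details.

```python
import json
from urllib.parse import urlencode

# GA4 Measurement Protocol collection endpoint
MP_ENDPOINT = "https://www.google-analytics.com/mp/collect"


def build_mp_request(measurement_id, api_secret, client_id, event_name, params):
    """Return (url, body) for a Measurement Protocol POST. Nothing is sent."""
    query = urlencode({"measurement_id": measurement_id, "api_secret": api_secret})
    payload = {
        "client_id": client_id,
        "events": [{"name": event_name, "params": params}],
    }
    return f"{MP_ENDPOINT}?{query}", json.dumps(payload)


url, body = build_mp_request(
    measurement_id="G-XXXXXXX",        # placeholder, not a real property
    api_secret="YOUR_API_SECRET",      # placeholder
    client_id="555.1234567890",        # placeholder client identifier
    event_name="brochure_download",    # hypothetical custom event name
    params={"file_name": "annual-report.pdf"},
)
print(url)
print(body)
```

To actually send the event, you would POST the body to the URL with an HTTP client of your choice.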

Step 5: Configure Internal and Developer Traffic Settings

The Gist: To avoid having employees or IT teams cloud your insights, set up filters for internal and developer traffic. You can create up to 10 filters per property.

The Gotcha: Setting up filters for these users is only the first step – you’ll also need to toggle the filter to “Active” for it to take effect (a step that didn’t exist in UA). Make sure to turn yours on for accurate reporting.

Step 6: Migrate Users

The Gist: If you were previously using UA, you’ll need to migrate your users and their permission settings to GA4. Google has a step-by-step guide for migrating users.

The Gotcha: Migrating users is a little more complex than just clicking a button. You’ll need to install the GA4 Migrator for Google Analytics add-on, then decide how to migrate each user from UA. You also have the option to add users manually.

Step 7: Migrate Custom Events

The Gist: Event tracking has fundamentally changed in GA4. While UA offered three default parameters for events (event category, event action, and event label), GA4 lets you create any custom conventions you’d like. With more options at your fingertips, it’s a great opportunity to think through your overall measurement approach and which data is truly useful for your business intelligence.

When mapping UA events to GA4, look first to see if GA4 is collecting the data as an enhanced measurement, automatically collected, or recommended event. If not, you can create your own custom event using custom definitions. Google has the details for mapping events.

The Gotcha: Don’t go overboard creating custom definitions – GA4 limits you to 50 per property.
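To make the mapping in Step 7 concrete, here is a rough Python sketch of one possible convention. It turns a UA category/action/label triple into a GA4-style event with a name and parameters, then counts the distinct custom parameters against the 50-per-property limit noted above. The naming convention, parameter names, and sample events are our own assumptions for illustration, not a Google-prescribed scheme.

```python
MAX_CUSTOM_DEFINITIONS = 50  # per-property limit cited in Step 7


def ua_event_to_ga4(category, action, label=None):
    """Map a UA category/action/label triple to a GA4-style event dict."""
    # Assumed convention: the UA action becomes the snake_case event name.
    name = action.strip().lower().replace(" ", "_")
    params = {"event_category": category}
    if label is not None:
        params["event_label"] = label
    return {"name": name, "params": params}


# Hypothetical UA events to migrate
ua_events = [
    ("Downloads", "PDF Download", "annual-report.pdf"),
    ("Forms", "Newsletter Signup", None),
]
ga4_events = [ua_event_to_ga4(*event) for event in ua_events]

# Every distinct parameter would need its own custom definition in GA4.
custom_params = {key for event in ga4_events for key in event["params"]}
within_limit = len(custom_params) <= MAX_CUSTOM_DEFINITIONS

print(ga4_events)
print(sorted(custom_params), within_limit)
```

Reusing a small set of parameter names across events, as this sketch does, is one way to stay well under the limit.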

Step 8: Migrate Custom Filters to Insights

The Gist: Custom filters in UA have become Insights in GA4. The platform offers two types of insights: automated insights based on unusual changes or emerging trends, and custom insights based on conditions that matter to you. As you implement GA4, you can set up custom insights for Google to display on your Insights dashboard. Google will also email alerts upon request.

The Gotcha: Similar to custom events, GA4 limits you to 50 custom insights per property.

Step 9: Migrate Your Segments

The Gist: Segments work differently in GA4 than they do in UA. In GA4, you’ll only find segments in Explorations. The good news is you can now set up segments for events, allowing you to segment data based on user behavior as well as more traditional segments like user geography or demographics.

The Gotcha: Each Exploration has a limit of 10 segments. If you’re using a lot of segments currently in UA, you’ll likely need to create individual reports to see data for each segment. While you can also create comparisons in reports for data subsets, those are even more limited at just four comparisons per report.

Step 10: Migrate Your Audiences

The Gist: Just like UA, GA4 allows you to set up audiences to explore trends among specific user groups. To migrate your audiences from one platform to another, you’ll need to manually create each audience in GA4.

The Gotcha: You can create a maximum of 100 audiences for each GA4 property (starting to sense a theme here?). Also, keep in mind that GA4 audiences don’t apply retroactively. While Google will provide information on users in the last 30 days who meet your audience criteria — for example, visitors from California who donated more than $100 — it won’t apply the audience filter to users earlier than that.

Step 11: Migrate Goals to Conversion Events

The Gist: If you were previously tracking goals in UA, you’ll need to migrate them over to GA4, where they’re now called conversion events. GA4 has a goals migration tool that makes this process pretty simple.

The Gotcha: GA4 limits you to 30 custom conversion events per property. If you’re in e-commerce or another industry with complex marketing needs, those 30 conversion events will add up very quickly. With GA4, it will be important to review conversion events regularly and retire ones that aren’t relevant anymore, like conversions for previous campaigns.

Step 12: Migrate Alerts

The Gist: Using custom alerts in UA? As we covered in Step 8, you can now set up custom insights to keep tabs on key changes in user activity. GA4 will deliver alerts through your Insights dashboard or email, based on your preferences.

The Gotcha: This one is actually more of a bonus – GA4 will now evaluate your data hourly, so you can learn about and respond to changes more quickly.
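Since so many of the gotchas above come down to limits, it can help to check your current UA setup against them before you migrate. The sketch below collects the limits quoted in this checklist into one Python dictionary and flags anything that exceeds them. The key names and the planned counts are hypothetical, and the limits are simply the figures cited in the steps above.

```python
# Limits as quoted in the checklist steps above
GA4_LIMITS = {
    "traffic_filters_per_property": 10,      # Step 5
    "custom_definitions_per_property": 50,   # Step 7
    "custom_insights_per_property": 50,      # Step 8
    "segments_per_exploration": 10,          # Step 9
    "comparisons_per_report": 4,             # Step 9
    "audiences_per_property": 100,           # Step 10
    "conversion_events_per_property": 30,    # Step 11
}


def over_limit(planned):
    """Return {item: (planned_count, limit)} for each count above its limit."""
    return {
        item: (count, GA4_LIMITS[item])
        for item, count in planned.items()
        if count > GA4_LIMITS[item]
    }


# Hypothetical counts taken from an existing UA setup
planned = {
    "audiences_per_property": 120,
    "conversion_events_per_property": 28,
    "segments_per_exploration": 14,
}
problems = over_limit(planned)
print(problems)
```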

The Future of Measurement Is Here

GA4 is already transforming how brands think about measurement and user insights – and it’s only the beginning. While Google has been tight-lipped about the GA4 roadmap, we can likely expect even more enhancements and capabilities in the not-too-distant future. The sooner you make the transition to GA4, the sooner you’ll have access to a new level of intelligence to shape your digital roadmap and business decisions.

Need a hand getting started? We’re here to help – reach out to book a chat with us.

The circular economy aims to help the environment by reducing waste, mainly by keeping goods and services in circulation for as long as possible. Unlike the traditional linear economy, in which things are produced, consumed, and then discarded, a circular economy ensures that resources are shared, repaired, reused, and recycled, over and over.

What does this have to do with your digital platform? In a nutshell: everything.

From tackling climate change to creating more resilient markets, the circular economy is a systems-level solution for global environmental and economic issues. By building digital platforms for the circular economy, your business will be better prepared for whatever the future brings.

The Circular Economy isn’t Coming. It’s Here.

With environmental challenges growing day by day, businesses all over the world are going circular. Here are a few examples:

- Target plans for 100% of its branded products to last longer, be easier to repair or recycle, and be made from materials that are regenerative, recyclable, or sustainably sourced.

- Trove’s ecommerce platform lets companies buy back and resell their own products. This extends each product’s use cycle, lowering the environmental and social cost per item.

- Renault is increasing the life of its vehicle parts by restoring old engine parts. This limits waste, prolongs the life of older cars, and reduces emissions from manufacturing.

One area where nearly every business could adopt a circular model is the creation and use of digital platforms. The process of building websites and apps, along with their use over time, consumes precious resources (both people and energy). That’s why Oomph joined 1% For the Planet earlier this year. Our membership reflects our commitment to do more collective good — and to hold ourselves accountable for our collective impact on the environment.

But we’re not just donating profits to environmental causes. We’re helping companies build sustainable digital platforms for the circular economy.

Curious about your platform’s environmental impact? Enter your URL into this tool to get an estimate of your digital platform’s carbon footprint.

Changing Your Platform From Linear to Circular

If protecting the environment and promoting sustainability is a priority for your business, it’s time to change the way you build and operate your websites and apps. Here’s what switching to a platform for the circular economy could look like.

From a linear mindset…

When building new sites or apps, many companies fail to focus on longevity or performance. Within just a few years, their platforms become obsolete, either as a result of business changes or a desire to keep up with rapidly evolving technologies.

So, every few years, they have to start all over again — with all the associated resource costs of building a new platform and migrating content from the old one.

Platforms that aren’t built with performance in mind tend to waste a ton of energy (and money) in their daily operation. As these platforms grow in complexity and slow down in performance, one unfortunate solution is to just increase computing power. That means you need new hardware to power the computing cycles, which leads to more e-waste, more mining for metals and more pollution from manufacturing, and more electricity to power the entire supply chain.

Enter the circular economy.

…to a circular approach.

Building a platform for the circular economy is about reducing harmful impacts and wasteful resource use, and increasing the longevity of systems and components. There are three main areas you can address:

1. Design out waste and pollution from the start.

At Oomph, we begin every project with a thorough and thoughtful discovery process that gets to the heart of what we’re building, and why. By identifying what your business truly needs in a platform — today and potentially tomorrow — you’ll minimize the need to rebuild again later.

It’s also crucial to build efficiencies into your backend code. Clean, efficient code makes things load faster and run more quickly, with fewer energy cycles required per output.

Look for existing frameworks, tools, and third-party services that provide the functions you need and will continue to stay in service for years or decades to come. And, instead of building a monolithic platform that has to be upgraded every few years or requires massive computing power, consider switching to a more nimble and efficient microservices architecture.

2. Keep products and services in use.

Regular maintenance and timely patching are key to prolonging the life of your platform. So is proactively looking for performance issues. Be sure to regularly test and assess your platform’s speed and efficiency, so you can address problems early on.

While we’re advocating for using products and services for as long as possible, if your platform is built on microservices, don’t be afraid to replace an existing service with a new one. Just make sure the new service provides a benefit that outweighs the resource costs of implementing it.

3. Aim to regenerate natural systems.

The term “regenerate” describes a process that mimics the cycles of nature by restoring or renewing sources of energy and materials. It might seem like the natural world is far removed from your in-house tech, but there are a number of ways that your IT choices impact the environment.

For starters, you can factor sustainability into your decisions around vendors and equipment. Look for digital hosting companies and data centers that are green or LEED-certified. Power your hardware with renewable energy sources. Ultimately, the goal is to consider not just how to reduce your platform’s impact on the environment, but how you can create a net-positive effect by doing better with less.

Get Ready for the Future

We’ve long seen that the ways in which businesses and societies use resources can transform local and global communities. And we know that environmental quality is inextricably linked to human wellbeing and prosperity. The circular economy, then, provides a way to improve our future readiness.

Companies that invest in sustainability generally experience better resilience, improved operational performance, and longer-lasting growth. They’re also better suited to meet the new business landscape, as governments incentivize sustainable activities, customers prefer sustainable products, and employees demand sustainable leadership.

Interested in exploring how you can join the new circular economy with your digital platforms? We’d love to help you explore your options. Just contact us.

In our previous post we broadly discussed the mindset of composable business. While “composable” can be a long-term, company-wide strategy for the future, companies shouldn’t overlook smaller-scale opportunities that exist at every level to introduce more flexibility, extend longevity, and reduce the costs of technology investments.

For maximum ROI, think big, then start small

Many organizations are daunted by the concept of shifting a legacy application or monolith to a microservices architecture. This is exacerbated when an application is nearing end of life.

Don’t discount the fact that a move to a microservices architecture can be done progressively over time. Replatforming a monolith, by contrast, is a huge investment in both time and money, and its returns may not be realized for years, until the new application is ready to deploy.

A progressive approach allows organizations to:

- Move faster and allow for adjustments as needed

- Begin realizing returns on investments faster

- Reduce risk by making smaller investments and deployments

- Ease the budgeting process by funding an overhaul in stages

- Improve quality by minimizing the scope of tests

- Save money on initial investment and maintenance where services are centralized

- Benefit from longevity of a component-based system

Prioritizing the approach by aligning technical architecture with business objectives

As with any application development initiative, aligning business objectives with technology decisions is essential. Unlike replatforming a monolith, however, prioritizing and planning the order of development and deployments is crucial to the success of the initiative.

Start with clearly defining your application with a requirements and feature matrix. Then evaluate each using three lenses to see priorities begin to emerge:

- With a current state lens, evaluate each item. Is it broken? Is it costly to maintain? Is it leveraged by multiple business units or external applications?

- Then with a future state lens, evaluate each item. Could it be significantly improved? Could it be leveraged by other business units? Could it be leveraged outside the organization (partners, etc.)? Could it be leveraged in other applications, devices, or locations?

- Lastly, evaluate the emerging priority items with a cost and effort lens. What is the level of effort to develop the feature as a service? What is the likely duration of the effort?
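One way to turn the three lenses above into a ranked list is a simple scoring pass over your feature matrix. The Python sketch below is only an illustration: the field names, the equal weighting of each question, and the sample features are all assumptions, and your own matrix will likely need different questions and weights.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    # Current state lens
    broken: bool
    costly_to_maintain: bool
    shared_today: bool
    # Future state lens
    could_improve: bool
    reusable_elsewhere: bool
    # Cost and effort lens
    effort_weeks: int


def priority(feature):
    """Benefit signals per week of effort (assumed equal weights)."""
    current = feature.broken + feature.costly_to_maintain + feature.shared_today
    future = feature.could_improve + feature.reusable_elsewhere
    return (current + future) / feature.effort_weeks


# Hypothetical rows from a requirements and feature matrix
features = [
    Feature("search", True, True, True, True, True, effort_weeks=5),
    Feature("billing", False, True, False, True, False, effort_weeks=8),
    Feature("user_profiles", False, False, True, True, True, effort_weeks=2),
]

ranked = sorted(features, key=priority, reverse=True)
for feature in ranked:
    print(f"{feature.name}: {priority(feature):.2f}")
```

A small, widely shared feature can outrank a bigger, more broken one here because effort sits in the denominator. Whether that trade-off suits you depends on your business objectives.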

Key considerations when planning a progressive approach

Planning is critical to any successful application development initiative, and designing a microservices-based architecture is no different. Be sure to consider the following key items as part of your planning exercises:

- Remember that rearchitecting a monolith feature as a service can open the door to new opportunities and new ways of thinking. It is helpful to ask, “If this feature were a standalone service, we could __.”

- Be careful of designing services that are too big in scope. Work diligently to break down the application into the smallest possible parts, even if it is later determined that some should be grouped together.

- Keep security front of mind. Where a monolith may have allowed for a straightforward security management policy with everything under one roof, a services architecture provides the opportunity for a more customized security policy, and the need to define how separate services are allowed to communicate with each other and the outside world.

In summary

A microservices architecture is an approach that can help organizations move faster, be more flexible and agile, and reduce costs on development and maintenance of software applications. By taking a progressive approach when rearchitecting a monolithic application, businesses can move quickly, reduce risk, improve quality, and reduce costs.

If you’re interested in introducing composability to your organization, we’d love to help! Contact us today to talk about your options.

Many organizations today, large and small, have a digital asset problem. Companies are amassing huge libraries of images, videos, audio recordings, documents, and other files — while relying on shared folders and email to move them around the organization. As asset libraries explode, digital asset management (DAM) is crucial for keeping things accessible and up to date, so teams can spend more time getting work done and less time hunting for files.

First Things First: DAM isn’t Dropbox

Some folks still equate DAM with basic digital storage solutions, like Dropbox or Google Drive. While those are great for simple sharing needs, they’re essentially just file cabinets in the cloud.

DAM technology is purpose-built to optimize the way you store, maintain, and distribute digital assets. A DAM platform not only streamlines day-to-day content work; it also systematizes the processes and guidelines that govern content quality and use.

Today’s DAMs have sophisticated functionality that offers a host of benefits, including:

- Providing efficient access for internal and external teams

- Streamlining workflows for sharing drafts and getting approvals

- Serving images in multiple sizes and formats, reducing duplication

- Enabling AI-powered categorization, tagging, and license tracking

- Preventing versioning and legal issues around asset use

Is it time for your business to invest in a DAM? Let’s see if you recognize the pain points below:

The 5 Signs You Need a DAM

There are some things you can’t afford not to invest in if they significantly impact your team’s creativity and productivity and your business’s bottom line. Here are some of the most common signs it’s time to invest in a DAM:

It takes more than a few seconds to find what you need.

As your digital asset library grows, it’s harder to keep sifting through it all to find things — especially if you’re deciphering other people’s folder systems. If you don’t know the exact name of an asset or the folder it’s in, you’re often looking for a needle in a haystack.

Using a DAM, you can tag assets with identifying attributes (titles, keywords, etc.) and then quickly search the entire database for the ones that meet your criteria. DAMs also offer AI- and machine-learning–based tagging, which automatically adds tags based on the content of an image or document. Voila! A searchable database with less manual labor.
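To show why tags make search so much faster than folder-diving, here is a toy Python sketch of a tag index. It is not how any particular DAM product works. The class, method names, asset IDs, and tags are all invented for illustration.

```python
from collections import defaultdict


class AssetIndex:
    """Toy inverted index: each tag points to the set of assets carrying it."""

    def __init__(self):
        self._by_tag = defaultdict(set)

    def add(self, asset_id, tags):
        for tag in tags:
            self._by_tag[tag.lower()].add(asset_id)

    def search(self, *tags):
        """Return the assets that carry every requested tag."""
        sets = [self._by_tag.get(tag.lower(), set()) for tag in tags]
        return set.intersection(*sets) if sets else set()


index = AssetIndex()
index.add("img-001", ["river", "volunteers", "2023"])
index.add("img-002", ["river", "wildlife"])
index.add("doc-010", ["policy", "2023"])

print(index.search("river"))
print(index.search("river", "2023"))
```

The lookup never touches file names or folder paths, which is the point: you can find an asset without knowing where someone filed it.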

You have multiple versions of documents — in multiple places.

Many of our clients, including universities, healthcare systems, libraries, and nonprofits, have large collections of policy documents. These files often live on public websites, intranets, and elsewhere, with the intent that staff can pull them up as needed.

Problem is, if there’s a policy change, you need to be sure that anywhere a document is accessed, it’s the most current version. And you can’t just delete old files on a website, because any previous links to them will go up in smoke.

DAMs are excellent at managing document updates and variations, making it easy to find and replace old versions. They can also perform in-place file swaps without breaking the connections to the pieces of content that refer to a particular file.
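The in-place swap described above usually comes down to one idea: content links to a stable asset ID, not to a specific file. Here is a minimal Python sketch of that idea, assuming a simple in-memory store. The class, method names, and file paths are hypothetical and not taken from any DAM product.

```python
class AssetStore:
    """Toy store where pages link to a stable ID, never to a file path."""

    def __init__(self):
        self._current = {}   # asset_id -> path of the current file
        self._history = {}   # asset_id -> earlier paths, oldest first

    def publish(self, asset_id, path):
        """Add an asset or swap in a new file under the same ID."""
        if asset_id in self._current:
            self._history.setdefault(asset_id, []).append(self._current[asset_id])
        self._current[asset_id] = path

    def resolve(self, asset_id):
        """Return the file a link to this ID should serve right now."""
        return self._current[asset_id]

    def versions(self, asset_id):
        return self._history.get(asset_id, []) + [self._current[asset_id]]


store = AssetStore()
store.publish("leave-policy", "files/leave-policy-2022.pdf")
page_link = "leave-policy"  # what a web page or intranet would reference

store.publish("leave-policy", "files/leave-policy-2023.pdf")  # policy change
print(store.resolve(page_link))
print(store.versions(page_link))
```

The page’s link never changes, yet it now serves the 2023 file, and the older version stays on record.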

You’re still managing assets by email.

With multiple team members or departments relying on the same pool of digital assets for a variety of use cases, some poor souls will spend hours every day answering email requests, managing edits, and transferring files. The more assets and channels you’re dealing with, the more unwieldy this gets.

DAMs facilitate collaboration by providing a single, centralized platform where team members can assign tasks, track changes, and configure permissions and approval processes. As a result, content creators know they’re using the most up-to-date, fully approved assets.

Your website doubles as a dump bin.

If your website is the source of assets for your entire organization, it can be a roadblock for other departments that need to use those assets in other places. They need to know how to find assets, download copies, and obtain sizes or formats that differ from the web-based versions… and there may or may not be a web team to assist.

What’s more, some web hosting providers offer limited storage space. If you have a large and growing digital library, you’ll hit those limits in no time.

A DAM provides a high-capacity, centralized location where staff can easily access current, approved digital assets in various sizes and formats.

You’re duplicating assets you already have.

How many times have you had different teams purchase assets like stock photography and audio tracks, when they could have shared the files instead? Or, maybe your storage folders are overrun with duplicates. Instead of relying on teams to communicate whenever they create or use an asset, you could simplify things with a DAM.

Storing and tagging all your assets, in various sizes and formats, in a DAM enables your teams to:

- Make the most of the assets you own

- Avoid creating unnecessary copies

- Access optimized versions for different applications

- Keep track of how many times each asset is used

When Should You Implement a DAM?

You can implement a DAM whether you have an existing website or you’re building a new one. DAM technology easily complements platform builds or redesigns, helping to make websites and intranets even more powerful. Organizing all of your assets in a DAM before launching a web project also makes it easier to migrate them to your new platform and helps ensure that nothing gets lost.

Plus, we’ve seen companies cling to old websites when too many departments are still using assets that are hosted on the site. Moving your assets out of your website and into a DAM frees you up to move on.

If you’re curious about your options for a DAM platform, there are a number of solutions on the market. Our partner Acquia offers an excellent DAM platform with an impressive range of functions for organizing, accessing, publishing, and repurposing assets, automating manual processes, and monitoring content metrics.

Other candidates to consider include Adobe Experience Manager Assets, Bynder, Picturepark, Canto, Cloudinary, Brandfolder, and MediaValet.

Given the number of DAMs on the market, choosing the right solution is a process. We’re happy to share our experience in DAM use and implementation, to help you find the best one for your needs. Just get in touch with any questions you have.

With all the hype swirling around technology buzzwords like blockchain, cryptocurrencies, and non-fungible tokens (NFTs), it can be hard to understand their real utility for your business. But as practical applications continue to emerge, more business leaders are starting to see adopting blockchain as a business priority (PDF) — and, potentially, a competitive advantage.

To help you make sense of it, we’ve compiled a high-level look at blockchain technology and its sister concept, Web3, along with some ideas for how this technology could be relevant to your business right now.

First, What are Blockchain & Web3?

Web3

“Web3” has become a catch-all term that refers to a decentralized online ecosystem where platforms and apps are owned not by a central gatekeeper, but by the users who help develop and maintain those services. To many, Web3 is the next iteration of the internet.

The first version of the internet, Web 1.0, was largely made of individual, static web pages created by the few people who understood the technology. We are currently in the midst of Web 2.0, which provides a platform with tools for non-technical people to create their own content, essentially democratizing authorship. With it, we saw the rise of social media platforms, shopping giants like Amazon, and work tools like Office and Google Suite. This also meant that much of our individual data (our posts, reviews, and photos) has been centralized into these behemoth systems.

The promise of Web3 is the opposite approach: decentralized content, with much greater control over what you create and in what ways your data is associated with your activity.

Blockchain

Web3 achieves its goals of decentralization via blockchain, a digital ledger that exists only on the internet. This ledger uses a complex cryptography system to create encrypted “blocks” of data, ensuring that all the transactions that are written to it are verifiable and unalterable.

This ledger is open to the world to access. It’s not hosted on a single server owned by one company, but instead across a vast network of computers. The technology keeps all transactions up to date everywhere at once, and maintenance fees are paid by those that access the data.

What makes the blockchain so valuable across sectors is that it helps reduce risk, eliminates fraud, and provides scalable transparency. As a chronological, decentralized, single source of truth, the blockchain creates trust in data. As an example, at its most basic level, this open ledger makes it possible for me to verify that you conducted a certain transaction on a certain date (like a digital receipt). But it can do much more than that.
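To make the "verifiable and unalterable" idea concrete, here is a minimal sketch of a hash-linked ledger. It is a toy, not how any particular blockchain is implemented: there is no network, no consensus, and no signatures, and the names `block_hash`, `add_block`, and `is_valid` are our own illustrative choices. It only shows why changing an old entry is detectable.

```python
import hashlib
import json


def block_hash(index, timestamp, data, prev_hash):
    """Return the SHA-256 digest of a block's contents."""
    payload = json.dumps(
        {"index": index, "timestamp": timestamp, "data": data, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, timestamp, data):
    """Append a block that points at the hash of the block before it."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    block = {
        "index": index,
        "timestamp": timestamp,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, timestamp, data, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else "0" * 64
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(
            block["index"], block["timestamp"], block["data"], block["prev_hash"]
        )
        if block["hash"] != recomputed:
            return False
    return True


# Hypothetical sample transactions, for illustration only.
ledger = []
add_block(ledger, "2022-01-01T09:00:00Z", {"from": "alice", "to": "bob", "amount": 5})
add_block(ledger, "2022-01-02T10:30:00Z", {"from": "bob", "to": "carol", "amount": 2})
```

If anyone edits the amount in the first block after the fact, `is_valid(ledger)` returns `False`, because the stored hash no longer matches the block's contents. That is the "digital receipt" property in miniature.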

Let’s take a look at some of the ways you can put the blockchain to use.

What Can Blockchain Do for Healthcare?

We’ve already started to see innovation around Web3 in the healthcare space, much of it focused on patient health records. Given the increasing fragmentation of healthcare, the strict privacy regulations in this space, and the high risk of data breaches with current systems, the healthcare industry is a good candidate for blockchain use and the security benefits of decentralization.

Use case: patient records

To imagine how the blockchain can change healthcare, let’s consider a typical patient who sees their general practitioner and requests a visit with a specialist. To ensure continuity of care, the patient’s health records must be sent from the primary doctor to the specialist’s office quickly and securely. Since most patients don’t have copies of all their medical records, they’re relying on their doctor’s office to transfer this sensitive data.

With the blockchain, patients can own their own medical records and control who has access to them. Doctors can add detailed entries to the digital ledger, which can be shared with other medical professionals as needed. Patients can also revoke access to anyone at any time.
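As a rough illustration of that ownership model, the sketch below shows a patient-controlled record where only the patient can grant or revoke access. This is plain Python rather than an actual smart contract, and the class name `PatientRecord` and the provider identifiers are hypothetical. A real system would also need identity verification, encryption, and regulatory compliance.

```python
class PatientRecord:
    """Toy model of a patient-owned record with revocable access."""

    def __init__(self, owner):
        self.owner = owner
        self.entries = []
        self.authorized = {owner}

    def grant(self, requester, provider):
        """Only the patient may give a provider access."""
        if requester != self.owner:
            raise PermissionError("only the patient can grant access")
        self.authorized.add(provider)

    def revoke(self, requester, provider):
        """Only the patient may take access away (never from themselves)."""
        if requester != self.owner:
            raise PermissionError("only the patient can revoke access")
        if provider != self.owner:
            self.authorized.discard(provider)

    def add_entry(self, author, note):
        """Authorized parties can append entries to the record."""
        if author not in self.authorized:
            raise PermissionError("author is not authorized")
        self.entries.append({"author": author, "note": note})

    def read(self, reader):
        """Authorized parties can read a copy of the entries."""
        if reader not in self.authorized:
            raise PermissionError("reader is not authorized")
        return list(self.entries)


# Hypothetical walk-through of a referral.
record = PatientRecord("patient")
record.grant("patient", "dr_gp")
record.add_entry("dr_gp", "Referred to cardiology")
record.grant("patient", "dr_specialist")
specialist_view = record.read("dr_specialist")
record.revoke("patient", "dr_specialist")
```

After the final `revoke` call, any further `record.read("dr_specialist")` raises `PermissionError`, while the general practitioner's access is unaffected.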

Improving access to patient information across providers is crucial for the healthcare industry, given that medical errors are the third leading cause of death in the U.S. Current medications and their side effects could be part of the blockchain ledger, to help reduce complications. In addition, smart contracts that automatically seek out potential conflicts between medications could be added to medical records.

This example barely scratches the surface; luckily, there are many companies already in this space, figuring out how to store patient data via blockchain in accordance with current regulations.

What Can Blockchain Do for Education?

You may not immediately think of educational applications as benefitting from blockchain technology. But paper records are one area that’s ripe for disruption, since moving to a digital format would make communication between institutions much more efficient. Here’s an example.

Use case: student transcripts

Having transcripts stored on a blockchain would make it easier for students to transfer their educational history from one school to another. It would also ensure that educational institutions, or even employers, could easily verify that history. In fact, MIT has been issuing digital, blockchain-stored diplomas since 2017.

Beyond transcripts, the Open Badge Passport issues digital badges that recognize learning, skills, and achievements by scraping information off the blockchain about individuals’ extracurricular activities. This allows students and others to demonstrate soft-skill talents that are valuable to have but not typically recorded by a degree.

What Can Blockchain Do for Ecommerce?

Product registration has long been a way for companies to gain access to buyers’ contact info (more than a way for customers to protect their investment, as advertised). Using blockchain, product registries can serve a greater range of purposes, offering value to both consumers and companies. That’s because when it comes to provenance — the place of origin or earliest known history of something — a blockchain is a perfect public record-keeping tool.

Use case: product registries

For high-ticket items that can be sold on the internet, like jewelry, designer clothing, or rare books, a blockchain entry can be used to prove, and verifiably transfer, an item’s ownership. This ownership history could not only help bolster the secondary market for verified products, but also make it harder for counterfeiters to pass off knock-off products as the real thing.

Imagine this approach for high-ticket items like autos. Supporting vehicle transactions that take place online or offline, a blockchain could store vehicle maintenance and crash reports. This trove of information could boost the resale value of a vehicle, because potential buyers can access the vehicle’s entire maintenance history. If the data is connected to onboard sensors, it might even include engine efficiency and tire wear.

What Can Blockchain Do for Non-Profits?

In this example, we combine blockchain, NFTs, and smart contracts to create a unique approach to a fundraising classic. Quick primer: NFTs are blockchain-based tokens that represent digital media like music, art, and videos, and can verify the authenticity, history, and sole ownership of a digital item. Smart contracts are preset functions that fire on a blockchain when specific conditions are met.

Use case: silent auctions with NFTs

Non-profits could take a new approach to fundraising with NFTs. Let’s say an artist creates (as a donation) a series of NFTs that are auctioned off to the highest bidder, with ownership of the artwork transferred via a blockchain. Or museums could sell digital representations of their collection, potentially fueling new derivative artwork. Artists could remix classic works into new art, providing additional promotional and fundraising opportunities. The classic silent auction gets upgraded.

But let’s take it a step further. Smart contracts on those NFTs could perpetually pay a royalty back to the original artist or the organization as a portion of any future sales of the artwork. That means one year’s fundraiser could potentially reap monetary benefits for many years to come. The contract could take any form negotiated by the artist and non-profit. For example, the initial artwork could be a donation while royalties from future ownership transfers pay the artist, or the artist and organization could split future proceeds.
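The royalty logic described here boils down to simple arithmetic that runs on every resale. The sketch below shows one possible split in plain Python. The function name `split_resale` and the 5% rates are assumptions for illustration, and a real smart contract would be written in a contract language and deployed on a chain.

```python
from decimal import Decimal


def split_resale(sale_price, artist_rate, nonprofit_rate):
    """Split a resale price between artist, non-profit, and seller."""
    price = Decimal(sale_price)
    artist = Decimal(artist_rate)
    nonprofit = Decimal(nonprofit_rate)
    if artist < 0 or nonprofit < 0 or artist + nonprofit > 1:
        raise ValueError("rates must be non-negative and sum to at most 1")
    cent = Decimal("0.01")
    artist_cut = (price * artist).quantize(cent)
    nonprofit_cut = (price * nonprofit).quantize(cent)
    # The seller keeps whatever remains after both royalties are paid.
    seller_cut = price - artist_cut - nonprofit_cut
    return {"artist": artist_cut, "nonprofit": nonprofit_cut, "seller": seller_cut}


# Hypothetical resale: $1,000 with 5% to the artist and 5% to the non-profit.
payout = split_resale("1000.00", "0.05", "0.05")
```

In this example the artist and the non-profit each receive 50.00 and the seller keeps 900.00. Setting either rate to zero models the other arrangements mentioned above.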

What Can Blockchain Do for You?

While blockchain technology was first conceived as a mechanism to support Bitcoin, today it offers tons of uses across industry sectors. This kind of advanced technology may seem only accessible to companies that can afford expensive developers, but the cost to incorporate blockchain technology into many business operations is often less than you think. Plus, new vendors are emerging all the time to provide blockchain technology for a broad range of applications.

Blockchain can not only help overcome challenges such as consumer privacy concerns and sensitive data management, it can also help organizations seize new opportunities for growth. Think about your company’s biggest challenge or goal, and there might be a way blockchain technology can address it.

Interested in looking at ways to incorporate blockchain into your company’s digital assets? Let’s talk.

When companies merge, successfully combining digital assets like websites, intranets, apps, and other platforms takes more than just squishing things together. Poorly merged digital properties can diminish brand equity, squander years of SEO value, and even drive away customers or employees — ultimately tanking the value the merger was supposed to create.

The challenge is that you’re bringing together two end-user communities with different experiences and expectations. And it’s easy to assume the bigger or faster-growing company has the better digital platform, even when there’s a lot you could learn from the smaller company’s practices.

That’s why we recommend a collaborative, UX-centered approach to combining digital properties, to ensure you’re leveraging the best of both worlds. In this article, we’ll share a sample of UX analyses that can help set up a new combined platform for success.

First, let’s talk about leveraging the right mindset.

A Different Approach

Typically, when combining digital platforms, companies tend to take a top-down approach, meaning there’s a hierarchy of decision-making based on which platform is believed to be better. But the calculus can change a lot when those decisions are made from the end users’ point of view instead.

From a practical standpoint, these companies are usually trying to create efficiencies and add new competencies while carefully messaging the benefits of the merger for their customers. They focus on things like branding, SEO, and consolidating social media — all of which are important, and none of which truly shapes the platform user’s experience.

To be fair, before the merger, both companies were likely focused on trying to create the best possible user experience for their customers. Now that they’re joining forces, each brings a unique set of learnings and techniques to the table. Which raises the question: what if your new partner handles some aspects of UX better than you?

Working collaboratively through in-depth Acquisition Analysis gives you an opportunity to extract the best from all digital properties, as either company’s platforms may have features, functionality, or content that does a better job of meeting business goals. How do you know which elements will be more successful? By auditing both platforms with tools like the ones we’ll talk about next.

When merging, don’t assume the bigger company should swallow the smaller and all its digital assets. There might be many things that the smaller company is doing better.

Conducting UX Audits

To preserve SEO value and cull the best-performing content for the new platform, many companies conduct content and SEO audits, often using free or paid tools. These usually involve flagging duplicate content, comparing performance metrics, and using R.O.T. (redundant/outdated/trivial) analyses.
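If you have a content inventory exported from both sites, a first-pass R.O.T. check can be scripted. The sketch below is one way to do it, under stated assumptions: each page is a dictionary with `url`, `body`, `updated`, and `views` keys (our own hypothetical shape), "outdated" means not updated in two years, and "trivial" is approximated by low page views. Your own thresholds will differ.

```python
import hashlib
from datetime import date


def audit_pages(pages, today, max_age_days=730, min_views=10):
    """Flag each page as redundant, outdated, and/or trivial."""
    seen = {}
    report = {}
    for page in pages:
        flags = []
        # Normalize case and whitespace so near-identical copies match.
        normalized = " ".join(page["body"].lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            flags.append("redundant (duplicates " + seen[digest] + ")")
        else:
            seen[digest] = page["url"]
        if (today - page["updated"]).days > max_age_days:
            flags.append("outdated")
        if page["views"] < min_views:
            flags.append("trivial")
        report[page["url"]] = flags
    return report


# Hypothetical inventory rows from Company A and Company B.
pages = [
    {"url": "/a/about", "body": "We build websites.",
     "updated": date(2022, 3, 1), "views": 1200},
    {"url": "/b/about", "body": "We  build   WEBSITES.",
     "updated": date(2019, 1, 15), "views": 4},
]
report = audit_pages(pages, today=date(2022, 6, 1))
```

Here `/b/about` is flagged on all three counts while `/a/about` comes back clean. A script like this only narrows the list; a person still needs to decide what to keep.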

What many organizations miss, however, is the opportunity to conduct UX and customer audits while directly comparing digital platforms. These can provide invaluable insights about the mental models and behaviors of users.

At a minimum, we recommend comparing both platforms using Nielsen Norman Group’s 10 usability heuristics. Setting the standard for user interface design for almost three decades, these guidelines give you a great baseline for identifying which parts of each platform are the most user-friendly. You can also compare heatmaps and scrollmaps to assess which platform does a better job of engaging users in ways that matter to your business goals.

Here are some other examples of UX analyses we conduct for clients when merging digital platforms:

Five-second test

With existing customers or representatives of your target audience, ask users to view a page for five seconds and then answer a few questions about it. You’re looking for gut feelings here, as first impressions can tell you a lot about a page’s effectiveness.

Questions might include:

- What does the site tell you about the company’s personality?

- What’s something you think you could do on that website?

- Did anything stand out as new or surprising to you?

This test should be done for multiple pages on a website, not just the homepage. It’s especially valuable for product or service pages, where you can assess whether specific features are easily visible and accessible.

Customer interview comparison

For this assessment, enlist 5 to 10 customers for each business. Have the customers of Company A use Company B’s platform and vice versa, asking them to explain the value each company offers. You can also ask users what’s missing when they use the other company’s website. What’s different and better (or worse) than before? The answers can help you determine which brand and functional elements are essential to the user experience for each platform.

This test can also provide insights about the impact of elements you may not have previously considered, like the quality of photography or the order in which information is presented. These elements can set expectations and affect how people use the platform, all of which contributes to building users’ trust.

For a more in-depth analysis of user engagement and preferences, try gathering a combination of quantitative and qualitative data.

Site map analysis

Given that the merging companies are likely in the same or similar industries, there will probably be overlap between the site maps for each company’s website. But there will also be elements that are unique to each site. To successfully blend the information architecture of both properties, you’ll need to determine which elements work best for your target audience.

In addition to comparing analytics for the different websites to see which elements are most effective, here are a few other research methods we recommend:

Cohort analysis

Looking at other websites in your industry, examine their site structures and the language they use (e.g. “Find a doctor” vs. “Find a provider”). This reflects visitors’ expectations of what information they’ll get and where they can find it. You can also identify areas where you should deviate from the norm, including language that’s more authentic and unique to your brand.

Card sort

Card sorting helps you understand how to structure content in a way that makes sense to your users, so they can find what they’re looking for. Participants group labeled notecards according to criteria they would naturally use. For example, if you have a car rental site, you could ask users to organize different vehicle models into groups that make sense to them. While your company might use terms like “family car” or “executive sedan,” your customers might have completely different perceptions.
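Once the sessions are done, a common first step is to count how often participants placed two cards in the same group. The sketch below shows that tally in Python. The card names and the `co_occurrence` helper are illustrative assumptions, not output from any particular card-sorting tool.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants grouped each pair of cards together."""
    counts = Counter()
    for groups in sorts:
        for group in groups:
            # Sort names so each pair is always stored in the same order.
            for first, second in combinations(sorted(group), 2):
                counts[(first, second)] += 1
    return counts


# Hypothetical results: one list of groups per participant.
sorts = [
    [["minivan", "wagon"], ["sedan", "coupe"]],
    [["minivan", "wagon", "sedan"], ["coupe"]],
    [["minivan", "wagon"], ["sedan"], ["coupe"]],
]
pairs = co_occurrence(sorts)
```

All three participants put the minivan and the wagon together, which suggests those belong under one label, while only one participant paired the sedan with the coupe.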

Tree testing

Tree testing helps you evaluate a proposed site structure by asking users to find items based solely on the menu structure and terminology. Using an online interface (Treejack is a popular one) that displays only navigation links without layout or design, users are asked to complete a series of 10–15 tasks. This can show you how easy it is for site visitors to find and use information. This test is often used after card sorting sessions to confirm that the findings from the card sorting exercise are correct.
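Tree test results are usually summarized as a success rate per task. Here is a minimal sketch of that calculation, assuming each result is a hypothetical `(task, found)` pair exported from whichever tool you use.

```python
def task_success_rates(results):
    """Return the share of attempts that succeeded for each task."""
    totals = {}
    for task, found in results:
        attempts, successes = totals.get(task, (0, 0))
        totals[task] = (attempts + 1, successes + (1 if found else 0))
    return {
        task: successes / attempts
        for task, (attempts, successes) in totals.items()
    }


# Hypothetical results: did the participant reach the right menu item?
results = [
    ("Find a doctor", True),
    ("Find a doctor", True),
    ("Find a doctor", False),
    ("Pay a bill", True),
    ("Pay a bill", False),
    ("Pay a bill", False),
    ("Pay a bill", False),
]
rates = task_success_rates(results)
```

In this sample, one in four participants completed "Pay a bill", a signal that its place in the menu or its label needs another look.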

Use Information, Not Intuition

As we said, just because a larger company acquires a smaller one doesn’t mean its digital properties have nothing to learn from the other’s. Better practices could exist in either place, and it would be a shame to lose any unique value the smaller company’s platform might offer.

With so many robust tools available for UX analysis, there’s no reason not to gather the crucial data that will help you decide which features of each platform will best achieve your business goals. When combining digital properties, the “1 + 1 = 3” trope only works if you truly glean the best of both worlds.

Need help laying the groundwork for merging separate digital platforms? Our strategic UX experts can craft a set of research exercises to help your team make the best possible decisions. Contact us today to learn more.

Is your digital platform still on Drupal 7? By now, hopefully, you’ve heard that this revered content management system is approaching its end of life. In November 2023, official Drupal 7 support from the Drupal community will end, including bug fixes, critical security updates, and other enhancements.

If you’re not already planning for a transition from Drupal 7, it’s time to start.

With nearly 12 million websites currently hacked or infected, Drupal 7’s end of life carries significant security implications for your platform. In addition, any plug-ins or modules that power your site won’t be supported, and site maintenance will depend entirely on your internal resources.

Let’s take a brief look at your options for transitioning off Drupal 7, along with five crucial planning considerations.

What Are Your Options?

In a nutshell, you have two choices: upgrade to Drupal 9, or migrate to a completely new CMS.

With Drupal 9’s advanced features and functionalities, migrating from Drupal 7 to 9 involves much more than applying an update to your existing platform. You’ll need to migrate all of your data to a brand-new Drupal 9 site, with a whole new theme system and platform requirements.

Drupal 9 also requires a different developer skill set. If your developers have been on Drupal 7 for a long time, you’ll need to factor a learning curve into your schedule and budget, not only for the migration but also for ongoing development work and maintenance after the upgrade.

As an alternative, you could take the opportunity to build a new platform with a completely different CMS. This might make sense if you’ve experienced a lot of pain points with Drupal 7, although it’s worth investigating whether those problems have been addressed in Drupal 9.

Drupal 10

What of Drupal 10, you ask? Drupal 10 is slated to be released in December 2022, but it will take some time for community-contributed modules to be updated to support the new version. Once ready, updating from Drupal 9 to Drupal 10 should be a breeze.

Preparing for the Transition

Whether you decide to migrate to Drupal 9 or a new CMS, your planning should include the following five elements:

Content Audit

Do a thorough content inventory and audit, examine user analytics, and revisit your content strategy, so you can identify which content is adding real value to your business (and which isn’t).

Some questions to ask:

- Does your current content support your strategy and business goals?

- How much of the content is still relevant for your platform?

- Does the content effectively engage your target audience?

Another thing to consider is your overall content architecture: Is it a Frankenstein that needs refinement or revision? Hold that thought; in just a bit, we’ll cover some factors in choosing a CMS.

Design Evaluation

As digital experiences have evolved, so have user expectations. Chances are, your Drupal 7 site is starting to show its age. Be sure to budget time for design effort, even if you would prefer to keep your current design.

Drupal 7 site themes can’t be moved to a new CMS, or a different version of Drupal, without a lot of development work. Even if you want to keep the existing design, you’ll have to retheme the entire site, because you can’t apply your old theme to a new backend.

You’ll also want to consider the impact of a new design and architecture on your existing content, as there’s a good chance that even using an existing theme will require some content development.

Integrations

What integrations does your current site have, or need? How relevant and secure are your existing features and modules? Be sure to identify any modules that have been customized or are not yet compatible with Drupal 9, as they’ll likely require development work if you want to keep them.

Are your current vendors working well for you, or is it time to try something new? There are more microservices available than ever, offering specialized services that provide immense value with low development costs. Plus, a lot of functionalities from contributed modules in D7 are now a part of the D9 core.

CMS Selection & Architecture

Building the next iteration of your platform requires more than just CMS selection. It’s about defining an architecture that balances cost with flexibility. Did your Drupal 7 site feel rigid and inflexible? What features do you wish you had – more layout flexibility, different integrations, more workflow tools, better reporting, a faster user interface?

For content being brought forward, will migrating be difficult? Can it be automated? Should it be? If you’re not upgrading to Drupal 9, you could take the opportunity to switch to a headless CMS product, where the content repository is independent of the platform’s front end. In that case, you’d likely be paying for a product with monthly fees but no need for maintenance. On the flip side, many headless CMS platforms (Contentful, for example) don’t allow for customization.
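On the automation question, much of the work is mapping old fields to new ones and finding the gaps early. The sketch below assumes your old content has already been exported to flat dictionaries. The field names and the `map_node` helper are hypothetical, and this is not Drupal's own migration tooling.

```python
def map_node(old_node, field_map):
    """Copy fields into the new schema and list the ones with no content."""
    new_item = {}
    missing = []
    for old_field, new_field in field_map.items():
        value = old_node.get(old_field)
        if value in (None, ""):
            # Nothing to migrate; report it so an editor can decide.
            missing.append(old_field)
        else:
            new_item[new_field] = value
    return new_item, missing


# Hypothetical mapping from old field names to the new content model.
field_map = {
    "title": "headline",
    "body": "content",
    "field_legacy_tag": "tags",
    "field_hero": "hero_image",
}
old_node = {"title": "About us", "body": "<p>Hello</p>", "field_legacy_tag": ""}
new_item, missing = map_node(old_node, field_map)
```

Running a dry pass like this over the whole export gives you a list of empty or absent fields before any content moves, which helps answer whether automating the migration is worth it.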

Budget Considerations

There are three major areas to consider in planning your budget: hosting costs, feature enhancements, and ongoing maintenance and support. All of these are affected by the size and scope of your migration and the nature of your internal development team.

Key things to consider:

- Will the newly proposed architecture be supported by existing hosting? Will you need one vendor or several (if you’re using microservices)?

- If you take advantage of low or no-cost options for hosting, you could put the cost savings toward microservices for feature enhancements.

- Given Drupal 9’s platform requirements, you’ll need to factor in the development costs of upgrading your platform infrastructure.

- Will your new platform need regular updates? How often might you add feature enhancements and performance optimizations? And do you have an internal dev team to handle the work?

Look on the Bright Side

Whatever path you choose, transitioning from Drupal 7 to a new CMS is no small task. But there’s a bright side, if you view it as an opportunity. A lot has changed on the web since Drupal 7 was launched! This is a great time to explore how you can update and enhance your platform user experience.

Re-platforming is a complex process, and it’s one that we love guiding clients through. By partnering with an experienced Drupal development firm, most migrations can be planned and implemented more quickly, with better outcomes for your brand and your audience.

From organizing the project, to vendor and service selection, to budget management, we’ve helped a number of organizations bring their platforms into the modern web. To see an example, check out this design update for the American Veterinary Medical Association.

Need help figuring out the best next step for your Drupal 7 platform? Contact us today for a free consultation.

While the terminology was first spotlighted by IBM back in 2014, the concept of a composable business has recently gained much traction, thanks in large part to the global pandemic. Today, organizations are combining more agile business models with flexible digital architecture, to adapt to the ever-evolving needs of their company and their customers.

Here’s a high-level look at building a composable business.

What is a Composable Business?

The term “composable” encompasses a mindset, technology, and processes that enable organizations to innovate and adapt quickly to changing business needs.

A composable business is like a collection of interchangeable building blocks (think: Lego) that can be added, rearranged, and jettisoned as needed. Compare that with an inflexible, monolithic organization that’s slow and difficult to evolve (think: cinderblock). By assembling and reassembling various elements, composable businesses can respond quickly to market shifts.

Gartner offers four principles of composable business:

- Discovery: React faster by sensing when change is happening.

- Modularity: Achieve greater agility with interchangeable components.

- Orchestration: Mix and match business functions to respond to changing needs.

- Autonomy: Create greater resilience via independent business units.

These four principles shape the business architecture and technology that support composability. From structural capabilities to digital applications, composable businesses rely on tools for today and tomorrow.

So, how do you get there?

Start With a Composable Mindset…

A composable mindset involves thinking about what could happen in the future, predicting what your business may need, and designing a flexible architecture to meet those needs. Essentially, it’s about embracing a modular philosophy and preparing for multiple possible futures.

Where do you begin? Research by Gartner suggests the first step in transitioning to a composable enterprise is to define a longer-term vision of composability for your business. Ask forward-thinking questions, such as:

- How will the markets we operate in evolve over the next 3-5 years?

- How will the competitive landscape change in that time?

- How are the needs and expectations of our customers changing?

- What new business models or new markets might we pursue?

- What product, service, or process innovations would help us outpace competitors?

These kinds of questions provide insights into the market forces that will impact your business, helping you prepare for multiple futures. But you also need to adopt a modular philosophy, thinking about all the assets in your organization — every bit of data, every process, every application — as the building blocks of your composable business.

…Then Leverage Composable Technology

A long-term vision helps create purpose and structure for a composable business. Technology provides the tools that bring it to life. Composable technology begets sustainable business architectures, ready to address the challenges of the future, not the past.

For many organizations, the shift to composability means evolving from an inflexible, monolithic digital architecture to a modular application portfolio. The portfolio is made up of packaged business capabilities, or PBCs, which form the foundation of composable technology.

The ABCs of PBCs

PBCs are software components that provide specific business capabilities. Although similar in some respects to microservices, PBCs address more than technological needs. While a specific application may leverage a microservice to provide a feature, when that feature represents a business capability beyond just the application at hand, it is a PBC.

Because PBCs can be curated, assembled, and reassembled as needed, you can adapt your technology practically at the pace of business change. You can also experiment with different services, shed things that aren’t working, and plug in new options without disrupting your entire ecosystem.

When building an application portfolio with PBCs, the key is to identify the capabilities your business needs to be flexible and resilient. What are the foundational elements of your long-term vision? Your target architecture should drive the business outcomes that support your strategic goals.

Build or Buy?

PBCs can either be developed internally or sourced from third parties. Vendors may include traditional packaged-software vendors and nontraditional parties, such as global service integrators or financial services companies.

When deciding whether to build or buy a PBC, consider whether your target capability is unique to your business. For example, a CMS is something many businesses need, and thus it’s a readily available PBC that can be more cost-effective to buy. But if, through vendor selection, you find that your particular needs are unique, you may want to invest in building your own.

Real-World Example

While building a new member retention platform for a large health insurer, we discovered a need to quickly look up member status during the onboarding process. Because the company had a unique way of identifying members, it required building custom software.

Although the lookup was initially conceived in the context of the platform being created, a composable mindset led to the development of a standalone, API-first service: a true PBC providing member lookup capability to applications across the organization, and waiting to serve the applications of the future.
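To give a sense of what "API-first" means in practice, here is a minimal sketch of a lookup capability with a small, transport-agnostic interface. It is not the insurer's actual software. The names `MemberStatus`, `MemberLookupService`, and `handle_request`, along with the sample member data, are all hypothetical.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class MemberStatus:
    member_id: str
    active: bool
    plan: str


class MemberLookupService:
    """One business capability: look up a member's status by ID."""

    def __init__(self, records):
        self._records = dict(records)

    def lookup(self, member_id: str) -> Optional[MemberStatus]:
        # Normalize input so every calling application gets the same answer.
        return self._records.get(member_id.strip().upper())


def handle_request(service, member_id):
    """Return a (status_code, body) pair that any web framework could send."""
    status = service.lookup(member_id)
    if status is None:
        return 404, {"error": "member not found"}
    return 200, asdict(status)


# Hypothetical data store with a single member.
service = MemberLookupService(
    {"M-1001": MemberStatus(member_id="M-1001", active=True, plan="gold")}
)
response = handle_request(service, " m-1001 ")
```

Because the onboarding platform, a call-center tool, or a future mobile app would all call the same `handle_request` contract, the capability can be improved or replaced without touching the applications that depend on it.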

A Final Word

Disruption is here to stay. While you can’t predict every major shift, innovation, or crisis that will impact your organization, you can (almost) future-proof your business with a composable approach.

Start with the mindset, lay out a roadmap, and then design a step-by-step program for digital transformation. The beauty of an API-led approach is that you can slowly but surely transform your technology, piece by piece.

If you’re interested in exploring a shift to composability, we’d love to help. Contact us today to talk about your options.

Why are microservices growing in popularity for enterprise-level platforms? For many organizations, a microservice architecture provides a faster and more flexible way to leverage technology to meet evolving business needs. For some leaders, microservices better reflect how they want to structure their teams and processes.

But are microservices the best fit for you?

We’re hearing this question more and more from platform owners across multiple industries as software monoliths become increasingly impractical in today’s fast-paced competitive landscape. However, while microservices offer the agility and flexibility that many organizations are looking for, they’re not right for everyone.

In this article, we’ll cover key factors in deciding whether microservices architecture is the right choice for your platform.

What’s the Difference Between Microservices and Monoliths?

Microservices architecture emerged roughly a decade ago to address the primary limitations of monolithic applications: scale, flexibility, and speed.

Microservices are small, separately deployable software units that together form a single, larger application. Specific functions are carried out by individual services. For example, if your platform allows users to log in to an account, search for products, and pay online, those functions could be delivered as separate microservices and served up through one user interface (UI).
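The login, search, and payment example can be sketched as three independent services behind one thin UI layer. In the sketch below the services are in-process Python classes standing in for separately deployed units that would normally talk over a network. All class names and sample data are assumptions for illustration.

```python
class AuthService:
    """Stand-in for a separately deployed login service."""

    def __init__(self, users):
        self._users = dict(users)

    def login(self, username, password):
        return self._users.get(username) == password


class SearchService:
    """Stand-in for a separately deployed product search service."""

    def __init__(self, catalog):
        self._catalog = list(catalog)

    def search(self, term):
        return [name for name in self._catalog if term.lower() in name.lower()]


class PaymentService:
    """Stand-in for a separately deployed payment service."""

    def charge(self, username, amount_cents):
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        return {"user": username, "charged_cents": amount_cents, "status": "ok"}


class StorefrontUI:
    """One user interface that composes the three services."""

    def __init__(self, auth, search, payments):
        self.auth = auth
        self.search = search
        self.payments = payments

    def buy(self, username, password, term, price_cents):
        if not self.auth.login(username, password):
            return {"status": "denied"}
        matches = self.search.search(term)
        if not matches:
            return {"status": "not found"}
        receipt = self.payments.charge(username, price_cents)
        receipt["item"] = matches[0]
        return receipt


# Hypothetical wiring and a single purchase.
ui = StorefrontUI(
    AuthService({"ana": "s3cret"}),
    SearchService(["Trail Tent", "Camp Stove"]),
    PaymentService(),
)
receipt = ui.buy("ana", "s3cret", "tent", 12900)
```

The UI only knows each service's small interface, so any one of them could be swapped for a third-party product without rewriting the other two.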

In monolithic architecture, all of the functions and UI are interconnected in a single, self-contained application. All code is traditionally written in one language and housed in a single codebase, and all functions rely on shared data libraries.

Essentially, with most off-the-shelf monoliths, you get what you get. It may do everything, but not be particularly great at anything. With microservices, by contrast, you can build or cherry-pick optimal applications from the best a given industry has to offer.

Because of their modular nature, microservices make it easier to deploy new functions, scale individual services, and isolate and fix problems. On the other hand, with less complexity and fewer moving parts, monoliths can be cheaper and easier to develop and manage.

So which one is better? As with most things technological, it depends on many factors. Let’s take a look at the benefits and drawbacks of microservices.

Advantages of Microservices Architecture

Companies that embrace microservices see it as a cleaner, faster, and more efficient approach to meeting business needs, such as managing a growing user base, expanding feature sets, and deploying solutions quickly. In fact, there are a number of ways in which microservices beat out monoliths for speed, scale, and agility.

Shorter time to market

Large monolithic applications can take a long time to develop and deploy, anywhere from months to years. That could leave you lagging behind your competitors’ product releases or struggling to respond quickly to user feedback.

By leveraging third-party microservices rather than building your own applications from scratch, you can drastically reduce time to market. And, because the services are compartmentalized, they can be built and deployed independently by smaller, dedicated teams working simultaneously. You also have greater flexibility in finding the right tools for the job: you can choose the best of breed for each service, regardless of technology stack.

Lastly, microservices facilitate the minimum viable product approach. Instead of deploying everything on your wishlist at once, you can roll out core services first and then release subsequent services later.

Faster feature releases

Any changes or updates to monoliths require redeploying the entire application. The bigger a monolith gets, the more time and effort is required for things like updates and new releases.

By contrast, because microservices are independently managed, dedicated teams can iterate at their own pace without disrupting others or taking down the entire system. This means you can deploy new features rapidly and continuously, with little to no risk of impacting other areas of the platform.

This added agility also lets you prioritize and manage feature requests from a business perspective, not a technology perspective. Technology shouldn’t prevent you from making changes that increase user engagement or drive revenue—it should enable those changes.

Affordable scalability

If you need to scale just one service in a monolithic architecture, you’ll have to scale and redeploy the entire application. This can get expensive, and you may not be able to scale in time to satisfy rising demand.

Microservices architecture offers not only greater speed and flexibility, but also potential savings in hosting costs, because you can independently scale any individual service that’s under load. You can also configure a single service to add capacity automatically until demand is met, and then scale back to normal capacity.
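As a rough illustration of scaling one service on its own, the sketch below works out how many instances a single service might need for its current load. The function name, the per-instance target, and the minimum and maximum bounds are assumptions made for this example; real hosting platforms expose their own autoscaling settings.

```python
import math


def desired_instances(current_load: float, target_per_instance: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Return how many instances one service needs for its current load.

    Hypothetical helper: `current_load` is requests per second for this
    service only, and `target_per_instance` is what one instance can handle.
    """
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    needed = math.ceil(current_load / target_per_instance)
    # Clamp so the service never drops below its baseline or exceeds its budget.
    return max(min_instances, min(max_instances, needed))
```

Because the calculation only looks at one service's load, a spike in search traffic (for example) would add search instances without touching anything else.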

More support for growth

With microservices architecture, you’re not limited to a UI that’s tethered to your back end. For growing organizations that are continually thinking ahead, this is one of the greatest benefits of microservices architecture.

In the past, websites and mobile apps had completely separate codebases, and launching a mobile app meant developing a whole new application. Today, you just need to develop a mobile UI and connect it to the same service as your website UI. Make updates to the service, and it works across everything.

You have complete control over the UI: what it looks like, how it functions for the customer, and so on. You can also test and deploy upgrades without disrupting other services. And, as new forms of data access and usage emerge, you have readily available services that you can use for whatever application suits your needs: digital signage, voice commands for Alexa, and whatever comes next.
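To make the idea of several UIs sharing one service concrete, here is a minimal sketch. The `Product` type, the in-memory catalog, and the two render functions are all hypothetical stand-ins; in practice the service would sit behind an API and each UI would be its own application.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    price_cents: int


def get_product(product_id: str) -> Product:
    """Stand-in for the shared back-end service (hypothetical catalog)."""
    catalog = {"sku-1": Product("Field Guide", 1500)}
    return catalog[product_id]


def render_web(product_id: str) -> str:
    """Website UI: formats the shared data as HTML."""
    product = get_product(product_id)
    return f"<h1>{product.name}</h1><p>${product.price_cents / 100:.2f}</p>"


def render_mobile(product_id: str) -> dict:
    """Mobile UI: formats the same data as a payload for an app screen."""
    product = get_product(product_id)
    return {"title": product.name, "price": f"${product.price_cents / 100:.2f}"}
```

Both front ends call the same `get_product` function, so a change to the service shows up in each UI without duplicating logic.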

Optimal programming options

Since monolithic applications are tightly coupled and developed with a single stack, all components typically share one programming language and framework. This means any future changes or additions are limited to the choices you make early on, which could cause delays or quality issues in future releases.

Because microservices are loosely coupled and independently deployed, it’s easier to manage diverse datasets and processing requirements. Developers can choose whatever language and storage solution is best suited for each service, without having to coordinate major development efforts with other teams.

Greater resilience

For complex platforms, fault tolerance and isolation are crucial advantages of microservices architecture. There’s less risk of system failure, and it’s easier and faster to fix problems.

In monolithic applications, even just one bug affecting one tiny part of a single feature can cause problems in an unrelated area—or crash the entire application. Any time you make a change to a monolithic application, it introduces risk. With microservices, if one service fails, it’s unlikely to bring others down with it. You’ll have reduced functionality in a specific capacity, not the whole system.
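A simplified sketch of that kind of fault isolation is shown below. The service names and the `load_page` helper are assumptions for illustration only; the point is that each service is called independently, so one failure only blanks its own section.

```python
def load_page(services: dict) -> dict:
    """Call each service independently and collect the results by name."""
    page = {}
    for name, call in services.items():
        try:
            page[name] = call()
        except Exception:
            # Degrade this section only; the rest of the page still renders.
            page[name] = None
    return page


def broken_reviews():
    """Simulates a service that is currently unavailable."""
    raise ConnectionError("reviews service is down")


result = load_page({
    "catalog": lambda: ["Field Guide"],
    "reviews": broken_reviews,
})
```

Here `result` still contains the catalog data, while the reviews section is simply empty until that service recovers.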

Microservices also make it easier to locate and isolate issues, because you can limit the search to a single software module. In monoliths, by contrast, the possible chain of faults makes it hard to isolate the root cause of problems or predict the outcome of any changes to the codebase.

Monoliths thus make it difficult and time-consuming to recover from failures, especially since, once an issue has been isolated and resolved, you still have to rebuild and redeploy the entire application. Since microservices allow developers to fix problems or roll back buggy updates in just one service, you’ll see a shorter time to resolution.

Faster onboarding

With smaller, independent code bases, microservices make it faster and easier to onboard new team members. Unlike with monoliths, new developers don’t have to understand how every service works or all the interdependencies at play in the system.

This means you won’t have to scour the internet looking for candidates who can code in the only language you’re using, or spend time training them in all the details of your codebase. Chances are, you’ll find new hires more easily and put them to work faster.

Easier updates

As consumer expectations for digital experiences evolve over time, applications need to be updated or upgraded to meet them. Large monolithic applications are generally difficult, and expensive, to upgrade from one version to the next.

Because third-party app owners build and pay for their own updates, with microservices there’s no need to maintain or enhance every tool in your system. For instance, you get to let Stripe perfect its payment processing service while you leverage the new features. You don’t have to pay for future improvements, and you don’t need anyone on staff to be an expert in payment processing and security.

Disadvantages of Microservices Architecture

Do microservices win in every circumstance? Absolutely not. Monoliths can be a more cost-effective, less complicated, and less risky solution for many applications. Below are a few potential downsides of microservices.

Extra complexity

With more moving parts than monolithic applications, microservices may require additional effort, planning, and automation to ensure smooth deployment. Individual services must cooperate to create a working application, but the inherent separation between teams could make it difficult to create a cohesive end product.

Development teams may have to handle multiple programming languages and frameworks. And, with each service having its own database and data storage system, data consistency could be a challenge.

Also, when you choose to leverage numerous third-party services, you create more network connections, and with them more opportunities for latency and connectivity issues in your architecture.

Difficulty in monitoring

Given the complexity of microservices architecture and the interdependencies that may exist among applications, it’s more challenging to test and monitor the entire system. Each microservice requires individualized testing and monitoring.

You could build automated testing scripts to ensure individual applications are always up and running, but this adds time and complexity to system maintenance.
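A basic version of such a script might look like the sketch below, which uses only the Python standard library. The endpoint names and URLs are placeholders, and the assumption that each service exposes a health URL returning a 2xx status is ours, not a given.

```python
import urllib.request


def http_probe(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, ValueError):
        # Covers connection errors, timeouts, HTTP errors, and malformed URLs.
        return False


def failing_services(endpoints: dict, probe=http_probe) -> list:
    """Return the sorted names of services whose health check did not pass."""
    return sorted(name for name, url in endpoints.items() if not probe(url))
```

Calling `failing_services({"search": "https://example.com/health"})` on a schedule would give a list of services to alert on. The `probe` parameter exists so the check can be swapped out or faked in tests.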

Added external risks

There are always risks when using third-party applications, in terms of both performance and security. The more microservices you employ, the more possible points of failure exist that you don’t directly control.

In addition, with multiple independent containers, you’re exposing more of your system to potential attackers. Those distributed services need to talk to one another, and a high number of inter-service network communications can create opportunities for outside entities to access your system.

On the upside, the containerized nature of microservices architecture helps keep security threats in one service from compromising other system components. As we noted in the advantages section above, it’s also easier to track down the root cause of a security issue.

Potential culture changes

Microservices architecture usually works best in organizations that employ a DevOps-first approach, where independent clusters of development and operations teams work together across the lifecycle of an individual service. This structure can make teams more productive and agile in bringing solutions to market. But, at an organizational level, it requires a broader skill set for developing, deploying, and monitoring each individual application.

A DevOps-first culture also means decentralizing decision-making power, shifting it from project teams to a shared responsibility among teams and DevOps engineers. The goal is to ensure that a given microservice meets a solution’s technical requirements and can be supported in the architecture in terms of security, stability, auditing, and so on.

3 Paths Toward Microservices Transformation

In general, there are three different approaches to developing a microservices architecture:

1. Deconstruct a monolith

This kind of approach is most common for large enterprise applications, and it can be a massive undertaking. Take Airbnb, for instance: several years ago, the company migrated from a monolithic architecture to a service-oriented architecture incorporating microservices. Features such as search, reservations, messaging, and checkout were broken down into one or more individual services, enabling each service to be built, deployed, and scaled independently.

In most cases, it’s not just the monolith that becomes decentralized. Organizations will often break up their development group, creating smaller, independent teams that are responsible for developing, testing, and deploying individual applications.

2. Leverage PBCs

Packaged Business Capabilities, or PBCs, are essentially autonomous collections of microservices that deliver a specific business capability. This approach is often used to create best-of-breed solutions, where many services are third-party tools that talk to each other via APIs.

PBCs can stand alone or serve as the building blocks of larger app suites. Keep in mind, adding multiple microservices or packaged services can drive up costs as the complexity of integration increases.

3. Combine both types

Small monoliths can be a cost-effective solution for simple applications with limited feature sets. If that applies to your business, you may want to build a custom app with a monolithic architecture.

However, there are likely some services, such as payment processing, that you don’t want to have to build yourself. In that case, it often makes sense to build a monolith and incorporate a microservice for any features that would be too costly or complex to tackle in-house.
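One way to picture this hybrid is a monolith whose checkout code talks to the payment service through a small interface. The sketch below is illustrative only: `PaymentGateway`, `FakeGateway`, and `checkout` are hypothetical names, and a real integration would wrap the provider's own client library behind the same interface.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    """The only thing the monolith needs to know about the payment service."""

    def charge(self, amount_cents: int, token: str) -> bool: ...


class FakeGateway:
    """Test double standing in for a third-party payment service."""

    def charge(self, amount_cents: int, token: str) -> bool:
        return amount_cents > 0 and bool(token)


def checkout(cart_cents: list, token: str, gateway: PaymentGateway) -> str:
    """Monolith-side checkout logic that delegates payment to the gateway."""
    total = sum(cart_cents)
    return "paid" if gateway.charge(total, token) else "declined"
```

Keeping the dependency behind an interface also makes it easier to swap providers later, or to split more features out of the monolith over time.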

A Few Words of Caution

Even though they’re called “microservices”, be careful not to get too small. If you break services down into many tiny applications, you may end up creating an overly complex application with excessive overhead. Lots of micro-micro services can easily become too much to maintain over time, with too many teams and people managing different pieces of an application.

Given the added complexity and potential costs of microservices, for smaller platforms with only one UI it may be best to start with a monolithic application and slowly add microservices as you need them. Start at a high level and zoom in over time, looking for specific functions you can optimize to make you stand out.

Lastly, choose your third-party services with care. It’s not just about the features; you also need to consider what the costs might look like if you need to scale a particular service.

Final Thoughts: Micro or Mono?

Still trying to decide which architecture is right for your platform? Here are some of the most common scenarios we encounter with clients:

- If time to market is the most important consideration, then leveraging third-party microservices is usually the fastest way to build out a platform or deliver new features.

- If some aspect of what you’re doing is custom, then consider starting with a monolith and either building custom services or using third-party services where they suit a particular need.

- If you don’t have a ton of money, and you need to get something up quick and dirty, then consider starting with a monolith and splitting it up later.

Here at Oomph, we understand that enterprise-level software is an enormous investment and a fundamental part of your business. Your choice of architecture can impact everything from overhead to operations. That’s why we take the time to understand your business goals, today and down the road, to help you choose the best fit for your needs.

We’d love to hear more about your vision for a digital platform. Contact us today to talk about how we can help.

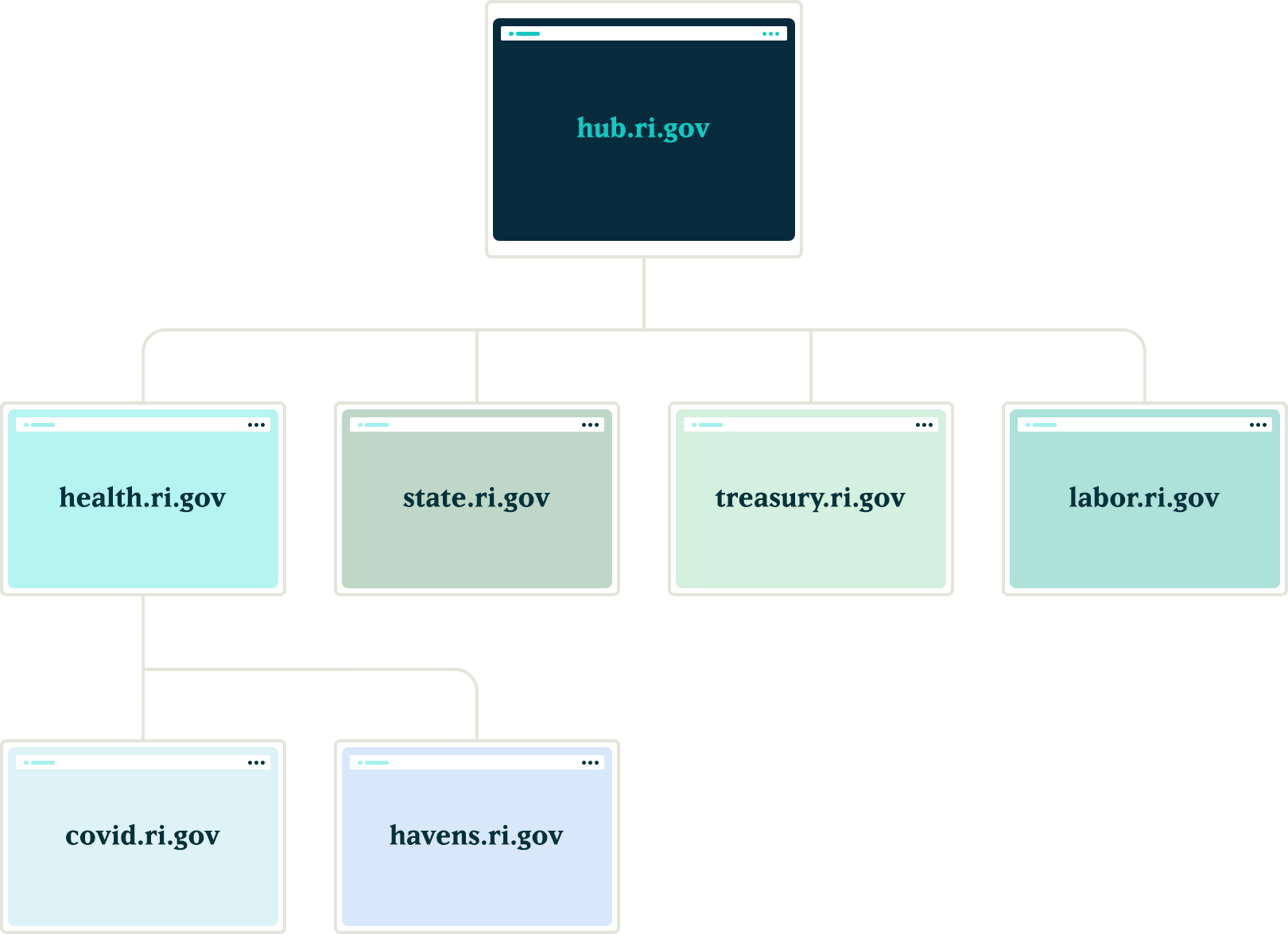

How we leveraged Drupal’s native APIs to push notifications to the many department websites for the State.

RI.gov is a custom Drupal distribution that was built with the sole purpose of running hundreds of department websites for the state of Rhode Island. The platform leverages a design system for flexible page building, custom authoring permissions, and a series of custom tools to make authoring and distributing content across multiple sites more efficient.

Come work with us at Oomph!

VIEW OPEN POSITIONS

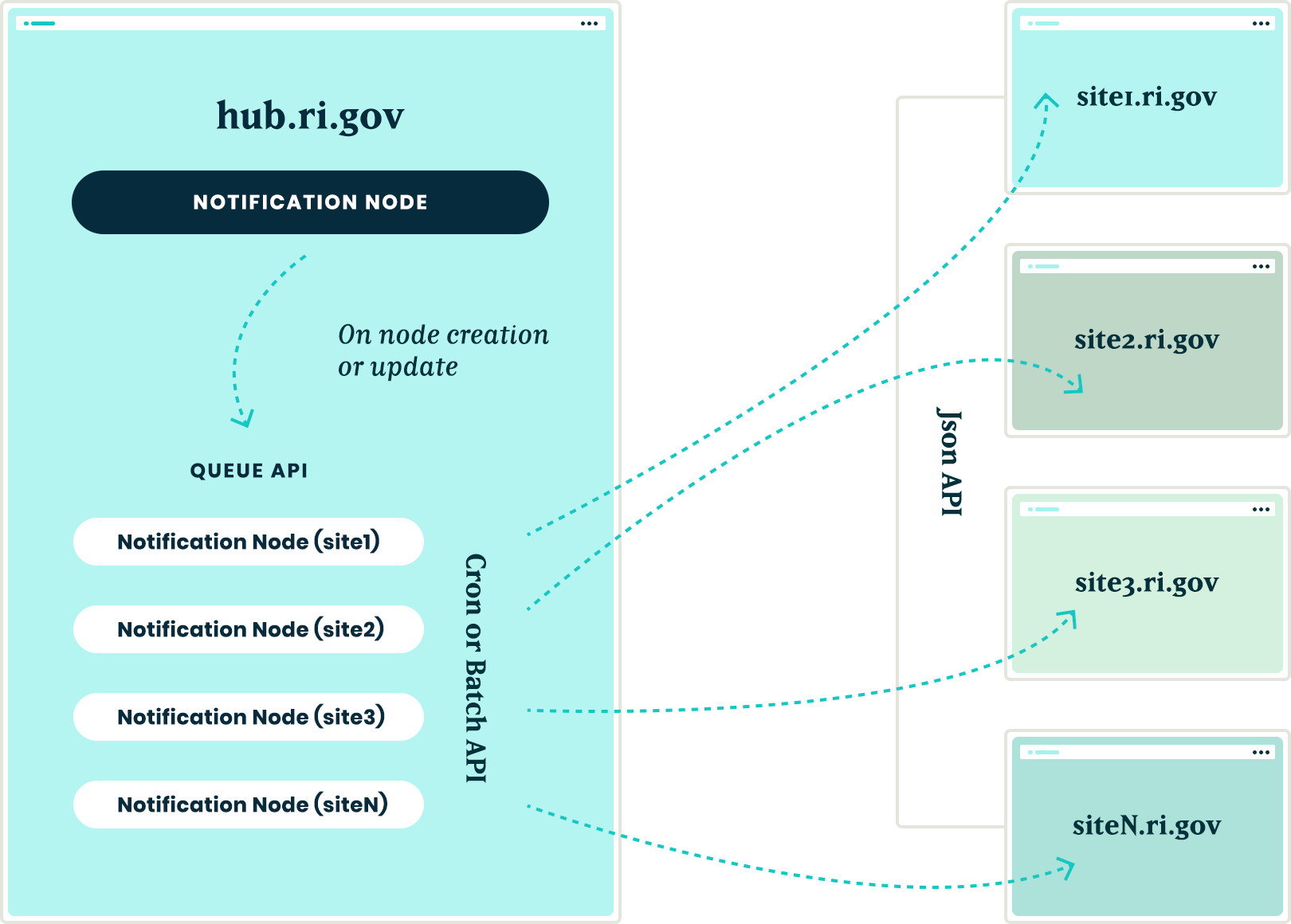

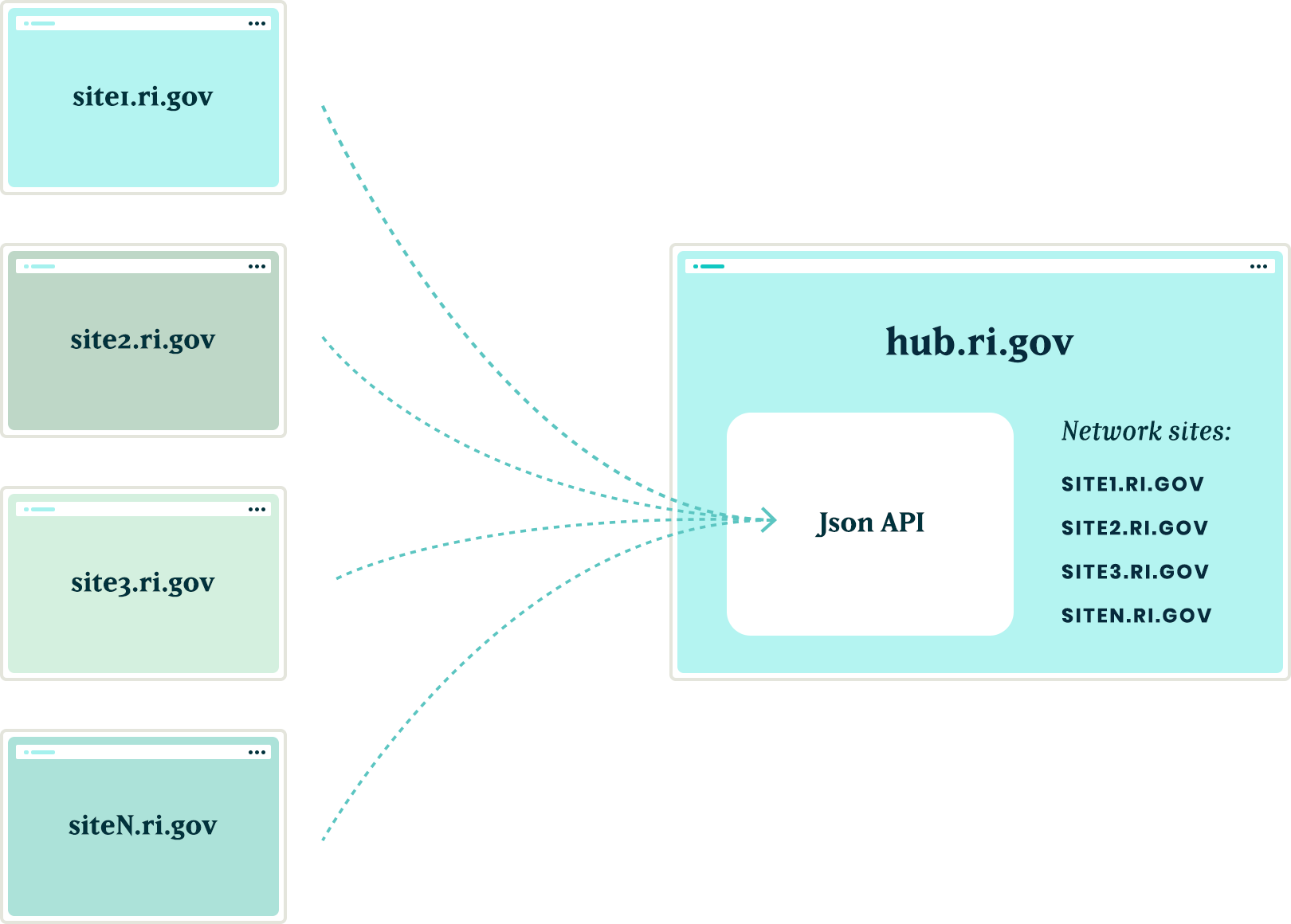

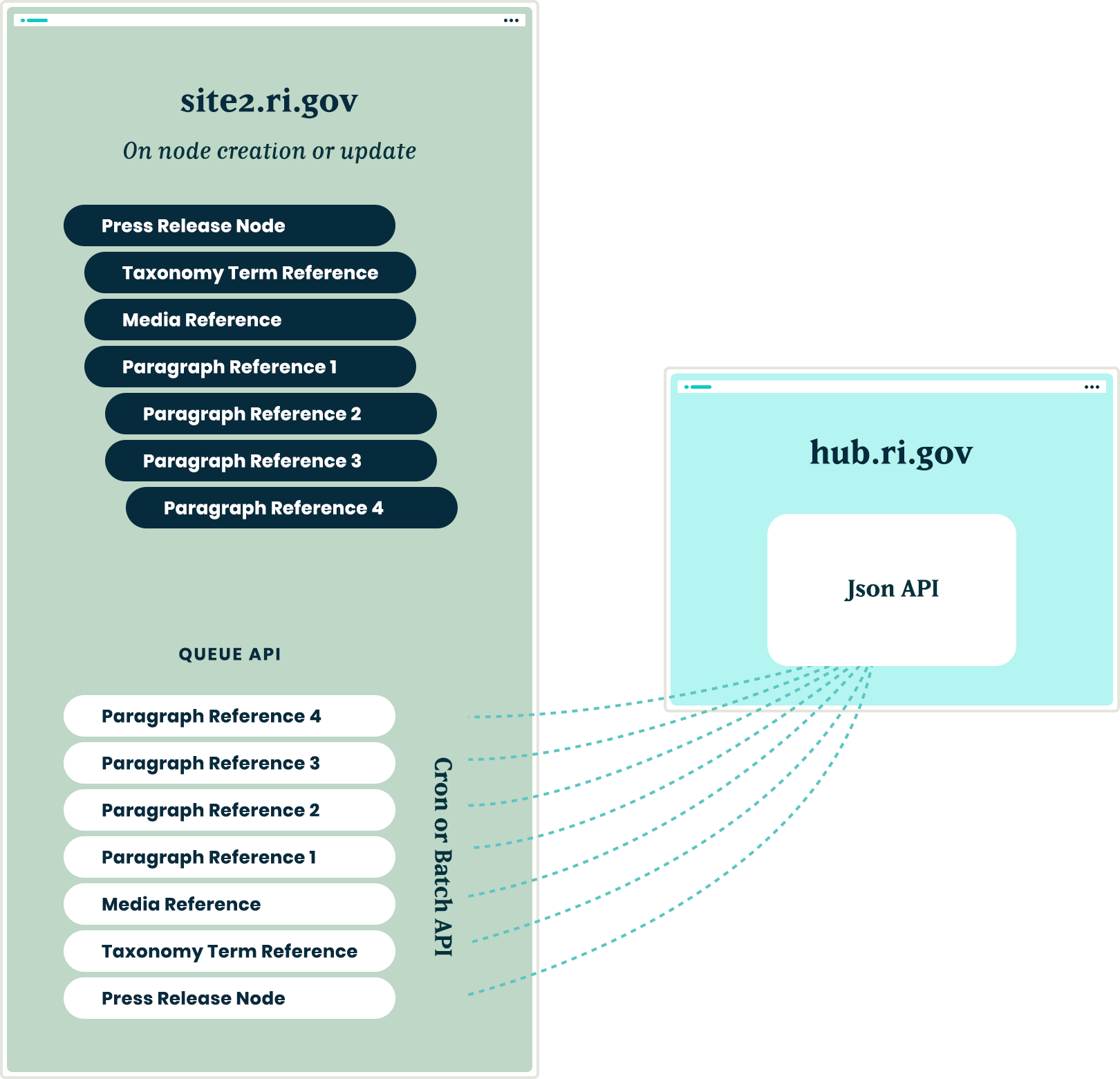

The Challenge

The platform had many business requirements, and one stated that a global notification needed to be published to all department sites in near real-time. These notifications would communicate important department information on all related sites. Further, these notifications needed to be ingested by the individual websites as local content to enable indexing them for search.

The hierarchy of the departments and their sites added a layer of complexity to this requirement. A department needs to create notifications that broadcast only to its subsidiary sites, not the entire network. For example, the Department of Health might need to create a health department-specific notification that would get pushed to the Covid site, the RIHavens site, and the RIDelivers site, but not to an unrelated department, like DEM.
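The targeting rule can be sketched in a few lines. This is a language-agnostic illustration written in Python rather than Drupal code, and the site identifiers and the shape of the hierarchy map are assumptions based on the example above, not the platform's actual data model.

```python
# Hypothetical parent -> child site map; the real hierarchy is not shown here.
CHILDREN = {
    "health": ["covid", "rihavens", "ridelivers"],
    "dem": [],
}


def broadcast_targets(origin: str, children: dict) -> list:
    """Return every site below `origin` in the hierarchy, excluding `origin`."""
    targets = []
    seen = {origin}
    queue = list(children.get(origin, []))
    while queue:
        site = queue.pop(0)
        if site in seen:
            continue  # Guard against duplicates or accidental cycles.
        seen.add(site)
        targets.append(site)
        queue.extend(children.get(site, []))
    return targets
```

With this map, a notification created by `"health"` reaches its three subsidiary sites and never reaches `"dem"`.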

Exploration

Aggregator:

Our first idea was to use the built-in Drupal Aggregator module and pull notifications from the hub. A proof of concept showed that while it worked well for pulling content from the hub site, it had a few problems: